False positives are the silent nightmare of AI writing. They happen when you write a text yourself, but a tool like Turnitin or ZeroGPT flags it as AI-generated. In this article, I’ll walk you through the most common mistakes that trigger false positives across leading AI detectors (Turnitin, Grammarly, Copyleaks, GPTZero, and Originality.ai) and share practical ways to avoid them.

What to Do if You Were Falsely Accused

No AI detector is 100% accurate. Sometimes, they can provide false positive results. If your assignment was flagged as AI-written, but you wrote it on your own, here are several steps to take:

- Try several AI checkers and multiple models to confirm that your text comes back clean.

- Don't react defensively to the professor's comments. Take your time and respond thoughtfully.

- Review your institution's AI usage policy.

- Collect any notes you made while writing, your revision history, and so on. You may need them to show how much effort you put into the assignment.

- For future assignments, use a workflow that leaves a trail: write in a Google Doc, which keeps a version history, and use the JustDone platform for research, writing, AI detection, and more.

- Gather work you submitted earlier to show that your current assignment matches your usual style.

- Send a thoughtful, polite response to your professor. Don't be afraid to share the evidence you gathered, but do it respectfully.

- If you can't resolve the issue with your professor, escalate it to the administration. Hold your position, but stay fair.

Don't forget to test your writing with multiple detectors before submitting. JustDone's AI Detector shows you exactly which phrases trigger false positives so you can fix them proactively.

What is a False Positive

A false positive happens when the system wrongly labels human-written text as AI-generated. A false negative is the opposite – when AI-written text is mistaken for human-written.

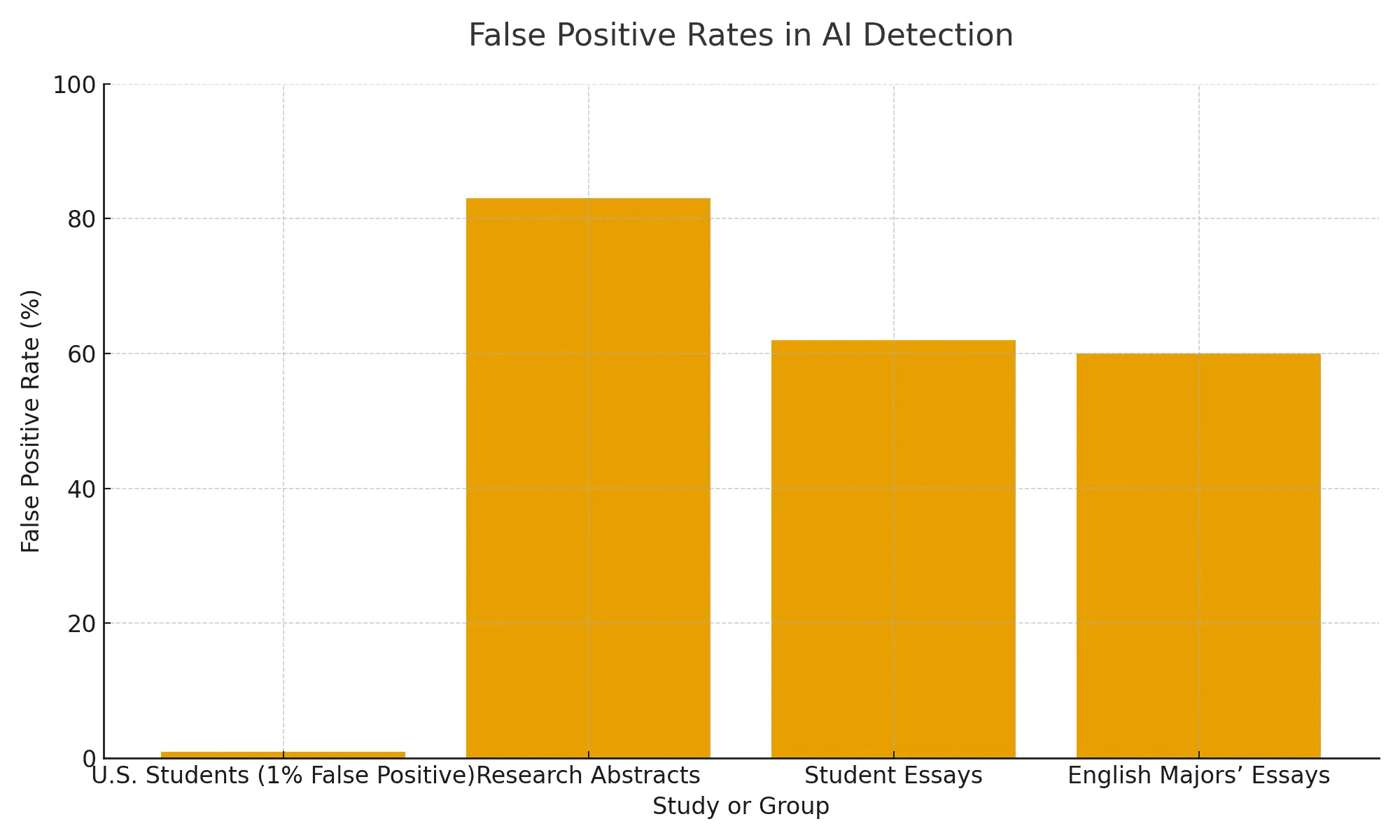

False positives from AI detectors are surprisingly common. In the U.S. alone, over 22 million students submit essays each year. Even a false positive rate of 1% would mean more than 220,000 students wrongly flagged for something they didn’t do.
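The arithmetic behind that estimate is easy to check. Here is a minimal Python sketch, assuming every student is checked once and wrote the work themselves. The function name and the extra rates are my own illustration, not figures measured for any detector.

```python
def expected_false_flags(num_writers: int, false_positive_rate: float) -> int:
    """Expected number of human writers wrongly flagged, given a rate in [0, 1]."""
    if not 0.0 <= false_positive_rate <= 1.0:
        raise ValueError("false_positive_rate must be between 0 and 1")
    return round(num_writers * false_positive_rate)


students = 22_000_000  # the U.S. figure quoted above
for rate in (0.001, 0.01, 0.05):  # illustrative rates, not measured ones
    flagged = expected_false_flags(students, rate)
    print(f"rate {rate:.1%}: about {flagged:,} students wrongly flagged")
```

At a 1% rate this works out to 220,000 students, and the count scales linearly with the rate, so even a small improvement in accuracy affects thousands of people.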

At JustDone, AI detection is focused on accuracy and context, not one-click judgments. It separates real false positives from the AI traces you would expect in normal, mixed-authorship workflows.

Here’s how advanced detectors like JustDone interpret different writing scenarios:

- Fully AI-written with no edits: AI-generated

- AI-written and human-edited: still AI-generated (editing doesn’t remove model fingerprints)

- Human-written but heavily AI-edited: AI-generated

- AI-generated outline + human-written + AI-edited: AI-generated

- Human-written with light AI editing: depends on how much the AI changed the structure or phrasing

- AI-assisted research, human-written text: human-generated

- Human-written and human-edited: human-generated

An AI outline means using a model to generate ideas, structure, or early drafts. Even if the final text is edited by a person, the structure may still trigger AI patterns. In such cases, the detection result isn’t a false positive; it reflects hybrid authorship.

Similarly, if ChatGPT writes your text and then you edit it manually, it may still test as AI-generated.

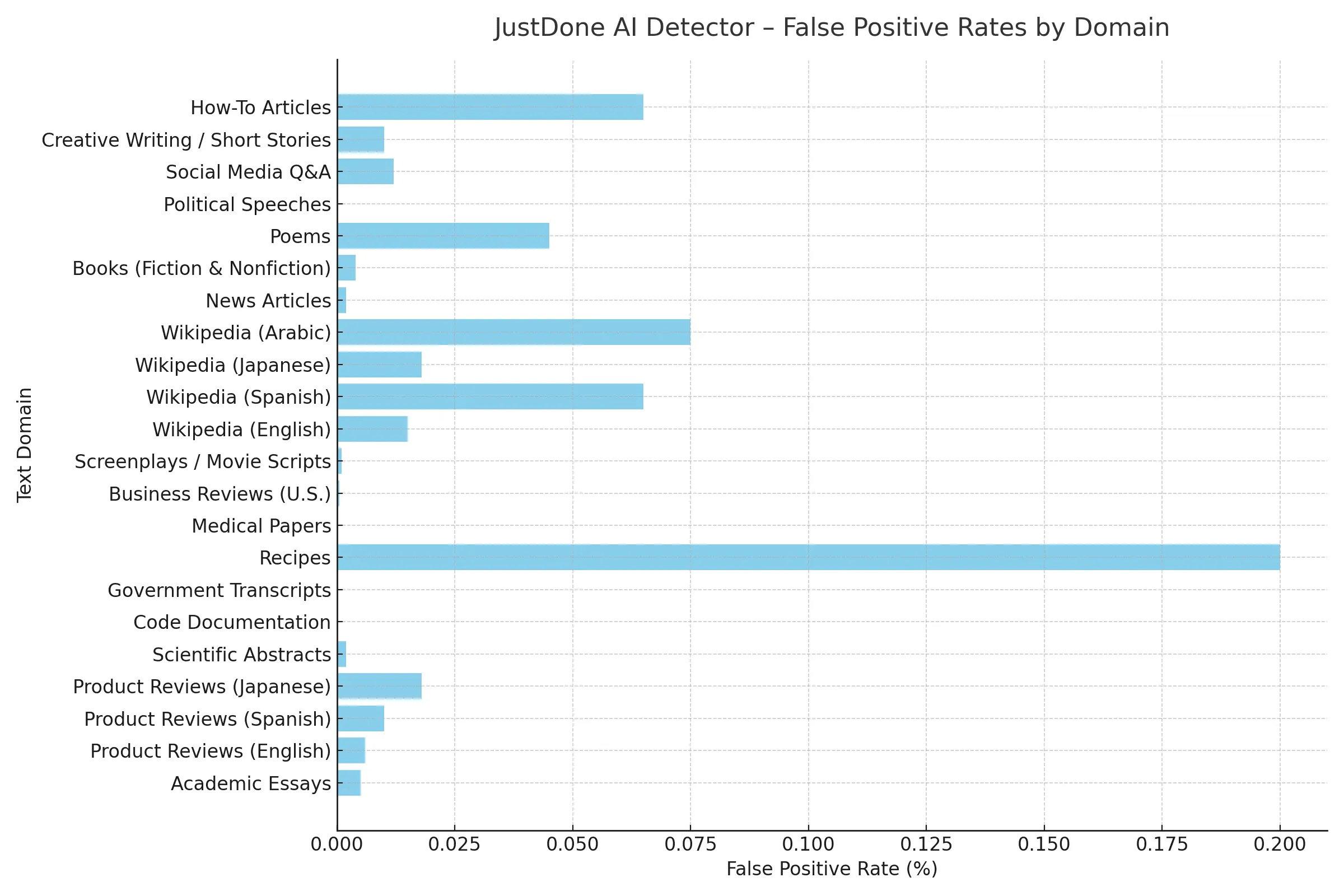

False positive rates depend on the domain. Here are the rates for JustDone's AI checker by domain:

False Positives on AI Detectors: Comparative Analysis

AI detectors handle false positives poorly. They operate on probability and signal patterns, not an understanding of intent.

Let’s look at how the most popular AI checkers deal with false positives.

| Tool | False Positive Triggers | Best For |
|---|---|---|
| Turnitin | Formal tone, even structure | Academic writing |
| Grammarly | Overuse of rephrasing suggestions | Casual writing, blog content, grammar improvements |
| Copyleaks | SEO-heavy phrasing; blog clichés | Web content, assignments with citations |
| GPTZero | Highly polished grammar and no typos, logical order | School essays, SOPs |
| Originality.ai | Repetitive transitions, passive voice | Content marketing, freelance writing |

These triggers explain why even high-quality human writing can be falsely flagged as AI-generated.

Turnitin AI Detection False Positives

Masjid, an Associate Professor of Politics at the University of Washington, USA, shares the concern that false positives are the tool's major issue: “We tested Turnitin’s AI detector and found 25% false positives.” The top three triggers are:

- Highly formal phrasing and academic diction: Phrases like “subsequently demonstrated” or “the results underscore” are common in scholarly writing, and detectors interpret uniform formality as AI.

- Even sentence length: AI-generated text tends to be rhythmic and uniform. A mix of short and complex sentences signals originality, while a consistent structure is a warning sign.

- Sparse contractions or colloquial language: AI rarely uses contractions like “I’ve” or natural spoken phrasing, so the absence of colloquialisms can cause false positives.

Advice: Add conversational connectors ("you know," "let me explain"), vary sentence rhythm, and mix complex and simple structures.
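If you want a rough number for how even your sentence rhythm is, you can measure it yourself. The Python sketch below is a self-check under my own assumptions: Turnitin does not publish how it scores text, so the function names, the naive sentence splitter, and the contraction pattern are illustrative only.

```python
import re
import statistics


def sentence_lengths(text: str) -> list[int]:
    # Naive splitter: breaks after ., ! or ? followed by whitespace.
    sentences = [s for s in re.split(r"(?<=[.!?])\s+", text.strip()) if s]
    return [len(s.split()) for s in sentences]


def length_variation(text: str) -> float:
    """Coefficient of variation of sentence length (std dev / mean), in words."""
    lengths = sentence_lengths(text)
    if len(lengths) < 2:
        return 0.0
    return statistics.pstdev(lengths) / statistics.mean(lengths)


def contraction_count(text: str) -> int:
    # Matches tokens like "I've" or "don't"; possessives ("John's") count too.
    pattern = r"\b\w+['’](?:s|t|ve|re|ll|d|m)\b"
    return len(re.findall(pattern, text, flags=re.IGNORECASE))


draft = "Stop. The results were far more surprising than anyone had expected."
print(round(length_variation(draft), 2), contraction_count(draft))
```

A variation near zero means every sentence is about the same length. There is no official cutoff, so compare the number against your own earlier writing rather than a fixed threshold.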

How Grammarly Triggers AI Detection

Grammarly isn’t just a grammar checker; its AI detection can raise flags too. Common triggers of Grammarly's AI detector include:

- Heavy reliance on paraphrasing suggestions: Overusing Grammarly’s rephrasing tool creates a texture that mimics AI rewriting patterns.

- Exact industry jargon or templates: Phrases like “touch base offline” or “leveraged best-in-class methodologies” echo corporate-speak, common in AI training sets.

- Unvaried synonyms: Using the same word repeatedly without natural variance can mimic AI.

I recommend using suggestions selectively. Introduce personal voice: add your own examples, anecdotes, and narrative breaks.

Copyleaks AI Detector Pitfalls

Copyleaks is a tool that has been marketed for content originality, but it can also serve as an AI detection tool. It often flags:

- Web-standard language: Phrases frequently found across blogs and guides, e.g., “in today’s digital landscape,” are red flags.

- SEO-like constructs: Repetitive keyword usage for SEO triggers alerts.

- Mixed citations and references: Some tools struggle with in-text citations or footnotes, especially in APA or MLA format.

When using the Copyleaks AI detector, vary your phrasing, don’t repeat SEO keywords mechanically, use citation formats the detector recognizes, and put references in-text instead of in page footnotes. This lowers the chance of being flagged.
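Mechanical keyword repetition is easy to spot before a detector does. Here is a minimal Python sketch that lists phrases repeated several times in a draft. The function name and the default phrase length and cutoff are my own assumptions, not anything Copyleaks documents.

```python
import re
from collections import Counter


def repeated_phrases(text: str, n: int = 2, min_count: int = 3) -> list[tuple[str, int]]:
    """Return n-word phrases that appear at least min_count times, most common first."""
    words = re.findall(r"[a-z0-9']+", text.lower())
    ngrams = (" ".join(words[i:i + n]) for i in range(len(words) - n + 1))
    counts = Counter(ngrams)
    return [(phrase, count) for phrase, count in counts.most_common() if count >= min_count]


draft = (
    "Best running shoes for beginners. Our best running shoes guide "
    "covers best running shoes."
)
for phrase, count in repeated_phrases(draft):
    print(f"{count}x  {phrase}")
```

Any phrase that shows up on this list is a candidate for rewording or for replacing with a pronoun or synonym.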

GPTZero False Positives

The GPTZero AI checker was built to spot AI text patterns, but it also misfires:

- Chunked logical structure: Clear topic sentences followed by multi-sentence development can look like AI-style organization.

- Absence of errors: Ironically, writing that’s too clean or too polished may read as machine-produced.

- Uncommon vocabulary: Rare advanced words can register as AI-trained content rather than smart human writing.

It's better to add minor human touches: a typo you notice and fix by hand, an occasional “uh” carried over into the final copy, or an anecdotal aside (“I remember…”). No need to butcher the text; small quirks are enough.

Originality.ai and What Trips It Up

The Originality.ai AI checker flags text using NLP pattern matching, and it’s sensitive to the following things:

- Repetitive sentence openers: Sentences starting with “Additionally,” “Furthermore,” “Moreover,” etc., can signal AI-like structure.

- Passive voice: AI tends to overuse “was/were” phrasing.

- No context or personal qualifiers: Humans sprinkle “in my experience,” “often,” or “really,” showing a personal point of view.

Try to vary sentence openers. Use active voice. Also, it's better to sprinkle in context: “In my last job…” or “Based on my experiment…” A personal touch keeps you uniquely human.
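To check how often you lean on transition openers, you can count them. The Python sketch below covers only the three transition words named above and uses a naive sentence splitter. The names are my own, and it does not reproduce how Originality.ai actually scores text.

```python
import re

# Transition words named in the section above; extend the set as needed.
TRANSITIONS = frozenset({"additionally", "furthermore", "moreover"})


def split_sentences(text: str) -> list[str]:
    # Naive splitter: breaks after ., ! or ? followed by whitespace.
    return [s for s in re.split(r"(?<=[.!?])\s+", text.strip()) if s]


def first_word(sentence: str) -> str:
    # Returns "" when the sentence starts with a quote mark, digit, or symbol.
    match = re.match(r"[A-Za-z']+", sentence)
    return match.group(0).lower() if match else ""


def transition_opener_share(text: str) -> float:
    """Share of sentences (0.0 to 1.0) that open with a listed transition word."""
    sentences = split_sentences(text)
    if not sentences:
        return 0.0
    hits = sum(1 for s in sentences if first_word(s) in TRANSITIONS)
    return hits / len(sentences)


draft = "Additionally, we tested it. The tool worked. Moreover, it was fast. It was cheap."
print(f"{transition_opener_share(draft):.0%} of sentences open with a transition word")
```

If the share is high, rewrite a few openers or fold the transition into the middle of the sentence.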

How to Reduce False Positives: Actionable Tips

The first thing you need is to make your text sound human. To do that, start with variety. Mix your sentences: long, short, conversational, active, informal. Read your draft aloud. If it feels mechanical, rewrite a few lines in your own voice.

Add personal anecdotes or reflections. Even a short aside like “I had to rethink my approach” personalizes your work. Small human details like these make a text less likely to be flagged.

Be cautious with automated grammar tools. With Grammarly, save a backup draft. After heavy editing, use the original to reintroduce quirks and tone.

One of the most important recommendations is to run multiple checkers, including the JustDone AI detector. For instance, if Turnitin flags a passage while GPTZero doesn’t, that's a clue about which trigger is at work. Compare results to isolate what’s tripping each tool.
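A simple way to compare results is to write down each tool's score and see where they split. The Python sketch below assumes you copy the scores in by hand from each report. It calls no detector API, and the scores and the 0.5 threshold are hypothetical.

```python
def split_by_verdict(scores: dict[str, float], threshold: float = 0.5) -> dict[str, list[str]]:
    """Group detector names by whether their AI score reaches the threshold."""
    verdicts: dict[str, list[str]] = {"flagged": [], "cleared": []}
    for name, score in sorted(scores.items()):
        verdicts["flagged" if score >= threshold else "cleared"].append(name)
    return verdicts


def detectors_disagree(scores: dict[str, float], threshold: float = 0.5) -> bool:
    """True when at least one tool flags the text and at least one clears it."""
    verdicts = split_by_verdict(scores, threshold)
    return bool(verdicts["flagged"]) and bool(verdicts["cleared"])


# Hypothetical scores (share of text judged AI-written), typed in by hand.
example = {"Turnitin": 0.62, "GPTZero": 0.08, "JustDone": 0.11}
print(split_by_verdict(example))
print("Detectors disagree:", detectors_disagree(example))
```

When the tools disagree, look at the passages the flagging tool highlighted and check them against that tool's known triggers.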

Cite clearly. Put in-text citations in the main body rather than footnotes, which some tools misread. A clear “According to Smith (2020)” is easier for a detector to parse.

Treat highlights as feedback, not final judgment. Detectors help you refine, not shame. If something gets flagged, review it. Unless it's a quotation or simply well-written prose, it may just need more of “you” and your voice.

Final Thoughts on AI False Positive Results

AI detection false positives happen, and they can create real stress for students, writers, and professionals. But they’re not verdicts; consider them nudges instead. I recommend mixing your sentence structure, injecting your experiences, treading carefully with automated edits, and checking with multiple tools. When your writing sounds human, you’ll see those false alarms drop. And as frustrating as they can be, these moments are just reminders to lean into your unique voice.

Readable, human writing should not be an accident; it is still something every academic setting deliberately requires. Detectors don’t know you, so they don’t see your thought process. When your work reflects you, they usually see that too.

So the next time a detector flags your essay, don’t overreact. Read it back, ask yourself if it sounds like you, and tweak until it does.