Key takeaways:

- AI detectors analyze writing patterns, including perplexity and burstiness, to estimate whether content was written by a human or an AI tool.

- No detector is 100% accurate: false positives and false negatives are real risks that can unfairly penalize genuine human writers.

- The best approach is to use AI detectors as one part of a broader content authenticity strategy, never as a sole verdict.

AI content detectors analyze text to estimate whether it was written by a human or an AI tool like ChatGPT or Gemini. They do this by measuring two key signals: perplexity (how predictable the writing is) and burstiness (how much sentence length and structure varies).

This guide breaks down how these tools work, compares the best options on the market, and explains their key limitations, including false positives, false negatives, and the challenge of keeping up with fast-evolving AI models.

Want to check your content right now? Try JustDone AI Detector to get a sentence-level breakdown of what reads as AI-generated, then fix it with confidence.

What Are AI Detectors?

AI content detectors are tools that estimate the probability that a piece of writing was created by a generative AI system, such as ChatGPT, Gemini, or Claude, rather than by a human.

They don't read text the way a teacher or editor would. Instead, they scan for statistical and linguistic fingerprints: patterns in word choice, sentence structure, predictability, and rhythm that tend to differ between human and machine-generated writing.

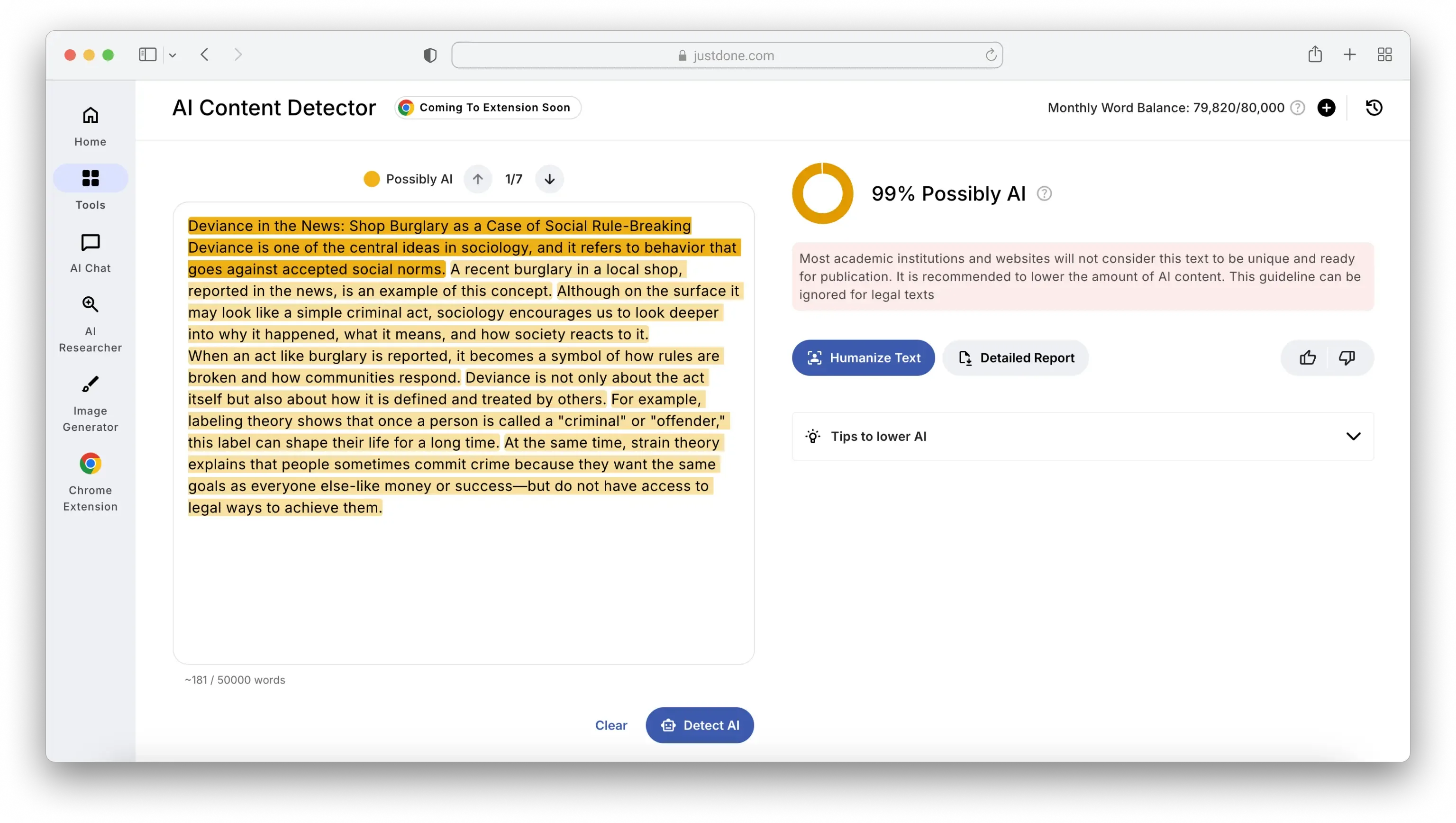

For instance, a GPT detection result may look like this (based on JustDone's output).

AI-generated content is becoming more prevalent online; some estimates suggest that up to 90% of online content could be AI-generated by 2026. As a result, AI checkers have become increasingly important for educators, publishers, recruiters, and content teams trying to verify authenticity.

How Does AI Content Detection Work?

To understand how AI detectors work, you need to know the technologies they're built on. These are the same foundational technologies that power generative AI models themselves.

- Machine Learning (ML)

Machine learning allows AI detectors to recognize patterns across massive datasets without being explicitly programmed with rules. The more examples a detector is trained on (texts labeled as "human-written" or "AI-generated"), the better it becomes at distinguishing between them. ML drives the predictive analysis that underpins perplexity measurement (more on that below) and makes detection possible at scale.

- Natural Language Processing (NLP)

NLP is the branch of AI concerned with helping computers understand human language. AI detectors use NLP to analyze not just individual words but the relationships between them: syntax, context, semantic meaning, and sentence structure. While AI can produce grammatically correct sentences, it tends to struggle with subtlety, creative deviation, and the kind of depth that comes naturally to experienced human writers. NLP helps detectors catch these differences.

- Classifiers

A classifier is a machine learning model that assigns input to one of several predefined categories. For AI detectors, the two primary categories are "AI-written" and "human-written." Classifiers are trained on large, pre-labeled datasets. They learn to identify features that distinguish the two classes – things like sentence length distribution, vocabulary diversity, and structural regularity. When you paste new text into a detector, the classifier evaluates these same features and places the text on one side of the boundary.
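To make the idea of a boundary between two classes concrete, here is a minimal sketch of a nearest-centroid classifier built on two of the features mentioned above: sentence-length spread and vocabulary diversity. The training texts, labels, and function names are invented for illustration; real detectors train much larger models on much more data.

```python
import re
import statistics


def features(text):
    """Return (sentence-length spread, vocabulary diversity) for a text."""
    sentences = [s for s in re.split(r"(?<=[.!?])\s+", text.strip()) if s]
    lengths = [len(s.split()) for s in sentences]
    words = re.findall(r"[a-z']+", text.lower())
    spread = statistics.pstdev(lengths) if len(lengths) > 1 else 0.0
    diversity = len(set(words)) / len(words) if words else 0.0
    return (spread, diversity)


def centroid(vectors):
    """Average each feature across a list of feature vectors."""
    return tuple(sum(col) / len(col) for col in zip(*vectors))


def train(labeled_texts):
    """Build one centroid per label from (text, label) pairs."""
    by_label = {}
    for text, label in labeled_texts:
        by_label.setdefault(label, []).append(features(text))
    return {label: centroid(vecs) for label, vecs in by_label.items()}


def classify(model, text):
    """Assign the label whose centroid is closest to the text's features."""
    point = features(text)

    def distance(label):
        return sum((a - b) ** 2 for a, b in zip(point, model[label]))

    return min(model, key=distance)


# Hypothetical, hand-written training examples (not real detector data).
TRAINING = [
    ("I woke up late. The sky outside was a mix of orange and gray, a sign "
     "of the storm approaching. Coffee first.", "human-written"),
    ("No. Absolutely not, I told her, though I already knew the answer "
     "would change by morning. It did.", "human-written"),
    ("The tool is easy to use. The tool is fast to run. The tool is simple "
     "to set up. The tool is safe to use.", "AI-written"),
    ("The report is clear and short. The report is easy to read. The report "
     "is good to share. The report is done.", "AI-written"),
]

model = train(TRAINING)
print(classify(model, "The app is easy to use. The app is fast to load. "
                      "The app is safe to run."))
```

The two features are left unscaled here for brevity, so sentence-length spread dominates the distance; a real system would normalize its features and use far more of them.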

Some advanced tools add intermediate categories. For instance, rather than a binary output, a classifier might distinguish between "AI-generated," "AI-generated & AI-refined," "human-written & AI-refined," and "human-written," giving users a much richer picture of how content was likely produced.

- Embeddings

Embeddings are numerical representations of words and phrases that allow computers to process language mathematically. Words are mapped into multi-dimensional vectors that capture their meaning and context. For example, "king" and "queen" have vectors that are close together in that space, because they share semantic proximity.

The word "bear" maps to different vectors depending on whether it appears in "a bear in the forest" or "bear in mind," so context changes the embedding. By mapping text into this vector space, AI detectors can analyze semantic coherence, detect unusual patterns of word association, and compare a new piece of writing against the patterns they've learned from training data.
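As a rough illustration of what "close together" means in vector space, the sketch below compares toy vectors with cosine similarity, a common way to measure how closely two vectors point in the same direction. The three-dimensional vectors are made up for this example; real embeddings are learned from data and have hundreds or thousands of dimensions.

```python
import math


def cosine_similarity(a, b):
    """Cosine of the angle between two vectors: 1.0 means same direction."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)


# Made-up 3-dimensional vectors, for illustration only.
TOY_EMBEDDINGS = {
    "king": (0.9, 0.8, 0.1),
    "queen": (0.9, 0.7, 0.2),
    "banana": (0.1, 0.2, 0.9),
}

royal = cosine_similarity(TOY_EMBEDDINGS["king"], TOY_EMBEDDINGS["queen"])
fruit = cosine_similarity(TOY_EMBEDDINGS["king"], TOY_EMBEDDINGS["banana"])
print(f"king vs queen:  {royal:.2f}")
print(f"king vs banana: {fruit:.2f}")
```

With these toy values, "king" and "queen" score much closer to 1.0 than "king" and "banana" do, which is the kind of semantic proximity described above.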

Perplexity: The Predictability Score

Perplexity is one of the most important metrics in AI detection. It measures how predictable a piece of text is or, put differently, how "surprised" a language model would be by the word choices and sentence structures it encounters.

Low perplexity means highly predictable text. The words and structures are exactly what you'd expect to come next. This is characteristic of AI-generated writing, which optimizes for coherence and readability.

High perplexity means less predictable text. The writing surprises you with unexpected word choices, unusual metaphors, or unconventional structures. This is more typical of human writing.

Here's how perplexity plays out in practice:

| Example Sentence | Perplexity Level | Why |
|---|---|---|
| The sky is blue. | Low | Extremely common and predictable. |
| The sky outside was a soft shade of silver before the storm. | Medium-low | Less predictable but still natural and clear. |
| The sky is remembering the rain we never had. | Medium | Poetic and metaphorical, so it feels more human. |
| I would love a bowl of grasshoppers jumping. | High | Grammatically odd and highly unexpected. |

Generative AI tends to produce text with low perplexity because it's optimizing for the most likely next word at each step. Humans, by contrast, make creative leaps and occasional errors, both of which raise perplexity.
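For readers who want the arithmetic, perplexity is conventionally computed as the exponential of the average negative log-probability a language model assigns to each token. The sketch below applies that standard definition to invented per-token probabilities; it does not call a real language model, and the numbers are assumptions chosen only to show the contrast.

```python
import math


def perplexity(token_probabilities):
    """Perplexity = exp of the average negative log-probability per token."""
    if not token_probabilities:
        raise ValueError("need at least one token probability")
    total_log = sum(math.log(p) for p in token_probabilities)
    return math.exp(-total_log / len(token_probabilities))


# Hypothetical probabilities a model might assign to each token in turn.
predictable = [0.9, 0.8, 0.9, 0.85]   # a highly expected sentence
surprising = [0.2, 0.05, 0.1, 0.01]   # an unexpected word sequence

print(round(perplexity(predictable), 2))
print(round(perplexity(surprising), 2))
```

A useful sanity check: if every token has probability 0.5, the perplexity is exactly 2, as if the model were choosing between two equally likely options at each step.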

That said, perplexity alone isn't a reliable signal. Some human writers, especially those writing in a formal, technical, or procedural style, naturally produce low-perplexity text. This is one of the reasons detectors can flag formal human writing as AI-generated.

Burstiness: The Rhythm of Human Writing

Burstiness measures variation in sentence length and structural complexity across a piece of text. Human writing naturally has high burstiness: we mix short punchy sentences with longer, more complex ones. We shift register, break rhythm for emphasis, and build and release tension through sentence structure.

AI writing tends to have low burstiness: sentences are consistently medium-length, structured similarly, and flow with an almost metronomic regularity that can feel smooth but lifeless.

| Sample Paragraph | Burstiness | Explanation |
|---|---|---|
| I woke up late. The sky outside was a mix of orange and gray, a sign of the storm approaching. Groggily, I made my way to the kitchen, grabbing a cup of coffee to shake off the sleepiness. It was going to be a long day, I could already tell. | High | Sentence lengths vary. Uses adverbs, present participles, and varied rhythm. |
| I woke up late in the morning. The sky outside was a mix of orange and gray. I made my way to the kitchen. I grabbed a cup of coffee. I could tell it would be a long day. | Low | Sentences are consistently short and simple. Monotonous structure throughout. |
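One simple way to put a number on this is the coefficient of variation of sentence length (standard deviation divided by mean). This is only a proxy chosen for this sketch; individual detectors do not necessarily define burstiness this way. The two strings are the sample paragraphs from the table above.

```python
import re
import statistics


def sentence_lengths(text):
    """Split on sentence-ending punctuation and count words per sentence."""
    sentences = [s for s in re.split(r"(?<=[.!?])\s+", text.strip()) if s]
    return [len(s.split()) for s in sentences]


def burstiness(text):
    """Coefficient of variation of sentence length (std dev / mean)."""
    lengths = sentence_lengths(text)
    if len(lengths) < 2:
        return 0.0
    return statistics.pstdev(lengths) / statistics.mean(lengths)


varied = (
    "I woke up late. The sky outside was a mix of orange and gray, a sign "
    "of the storm approaching. Groggily, I made my way to the kitchen, "
    "grabbing a cup of coffee to shake off the sleepiness. It was going to "
    "be a long day, I could already tell."
)
uniform = (
    "I woke up late in the morning. The sky outside was a mix of orange "
    "and gray. I made my way to the kitchen. I grabbed a cup of coffee. "
    "I could tell it would be a long day."
)

print(sentence_lengths(varied), round(burstiness(varied), 2))
print(sentence_lengths(uniform), round(burstiness(uniform), 2))
```

The first paragraph's sentences range from 4 to 18 words, while the second stays between 6 and 10, so the first scores noticeably higher on this measure.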

Perplexity and burstiness work together. A text with high burstiness tends to also have higher perplexity, because sudden shifts in sentence length make the next word harder to predict. Low burstiness usually correlates with low perplexity, since uniform sentences make patterns easy to anticipate.

AI detectors look for the balance of perplexity and burstiness that reflects natural human expression. Too little of either is a red flag.

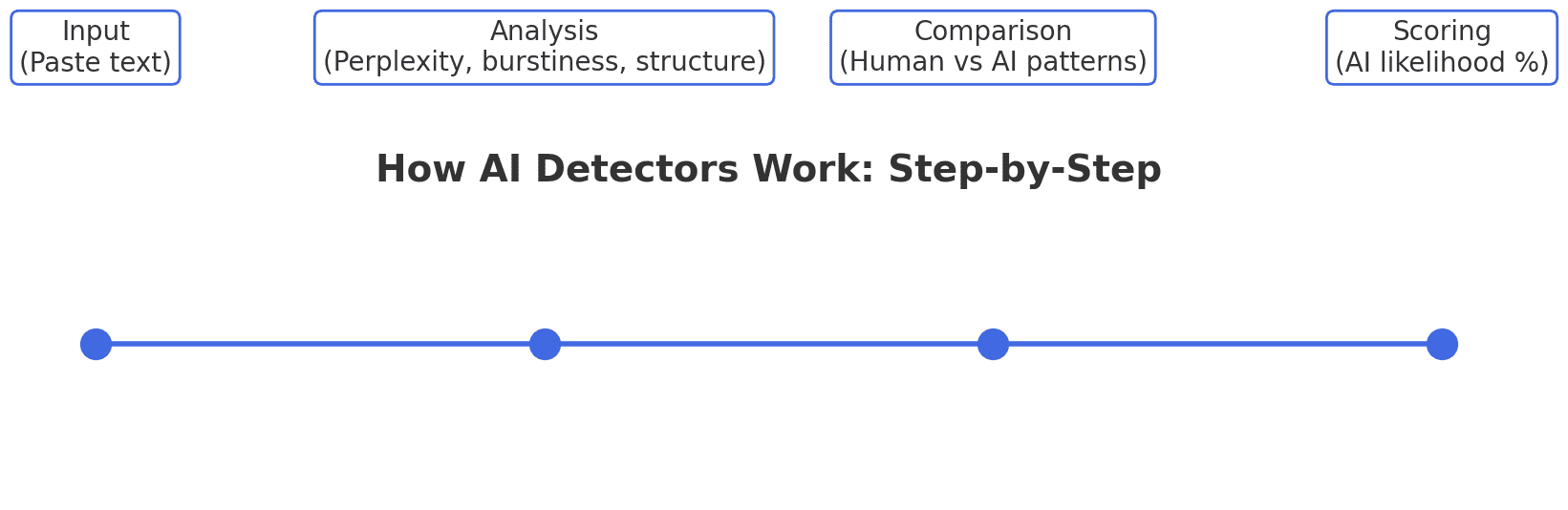

The Detection Process Step by Step

Here's what happens behind the scenes when you paste text into an AI detector like JustDone's:

- Input. You paste your text and hit "check."

- Tokenization. The detector breaks your writing into tokens, small units of language (words, subwords, punctuation) that it can analyze computationally.

- Feature analysis. The system examines multiple dimensions of your writing simultaneously: sentence length distribution, vocabulary frequency, structural patterns, perplexity, burstiness, repetition, and semantic coherence.

- Embedding comparison. Your text is converted into vectors and compared against the patterns the model has learned from its training data, which includes examples of both human-written and AI-generated text.

- Classification. The classifier evaluates all these signals and places your text on the spectrum between "AI-generated" and "human-written."

- Scoring. You receive a score or percentage indicating the likelihood of AI involvement: not a definitive verdict, but a probability estimate.
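The steps above can be sketched as a toy pipeline. This is an illustration under heavy assumptions, not how JustDone or any other detector is actually implemented: it skips the embedding comparison, uses only two hand-picked features, and the weights and bias are invented rather than learned from training data.

```python
import math
import re


def tokenize(text):
    """Tokenization: split text into lowercase word and punctuation tokens."""
    return re.findall(r"\w+|[^\w\s]", text.lower())


def extract_features(text):
    """Feature analysis: compute two simple signals from the text."""
    words = [t for t in tokenize(text) if t[0].isalnum()]
    if not words:
        raise ValueError("text contains no words")
    sentences = [s for s in re.split(r"(?<=[.!?])\s+", text.strip()) if s]
    lengths = [len(s.split()) for s in sentences]
    mean_len = sum(lengths) / len(lengths)
    variance = sum((n - mean_len) ** 2 for n in lengths) / len(lengths)
    return {
        "length_variation": math.sqrt(variance) / mean_len,
        "vocab_diversity": len(set(words)) / len(words),
    }


# Hypothetical parameters, chosen by hand for this sketch only.
WEIGHTS = {"length_variation": -6.0, "vocab_diversity": -4.0}
BIAS = 4.5


def ai_likelihood(text):
    """Classification and scoring: map the signals to a value in (0, 1)."""
    feats = extract_features(text)
    z = BIAS + sum(WEIGHTS[name] * value for name, value in feats.items())
    return 1 / (1 + math.exp(-z))  # logistic function


print(tokenize("Hi, there!"))
print(round(ai_likelihood("The app is fast. The app is safe. The app is new."), 2))
```

The logistic function at the end is what turns a raw weighted sum into a probability-style score, which is why the output reads as a likelihood rather than a yes-or-no answer.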

Want to try it yourself? Run your content through JustDone's AI Detector to see a sentence-level breakdown of which parts read as AI-generated and get actionable guidance on how to make your writing sound more authentically human.

AI Detectors vs. Plagiarism Checkers

These two tools are often confused, but they serve fundamentally different purposes. The table below summarizes the differences.

| | AI Detector | Plagiarism Checker |
|---|---|---|
| What it detects | Whether text was likely written by AI | |
| How it works | Analyzes writing patterns, predictability, and structure | |
| Detection focus | AI-generated content | |
| Output | Probability score (e.g., "73% AI-generated") | |
| Reliability | Probabilistic, may produce false positives/negatives | |
| Best for | Checking if writing feels machine-generated | |

There's one important overlap: plagiarism checkers sometimes flag AI-generated content as plagiarism, because generative AI draws on training sources it doesn't cite. The model may occasionally produce sentences that closely mirror existing text without attribution. This is why using both tools together gives you a more complete picture.

How Accurate Are AI Detectors?

Accuracy varies significantly across tools, and no detector is perfect. Here's how the leading AI detectors used by colleges compare:

| Tool | Reported Accuracy | Notes |
|---|---|---|
| JustDone | 94% | Explains why sections are flagged and allows humanization in one click |
| Turnitin | 86% | A gold standard among colleges for plagiarism checking |
| GPTZero | 95.7% | Well-known free and paid detector; performs reliably on clear AI content but can vary based on text type and editing |
| Copyleaks | 99% | Marketed as very high accuracy with low false positives (around 0.2%) |
| Originality.ai | 95-99% | Some independent tests show very high accuracy on standard AI text |

Several factors affect how reliable any detector will be:

- Text length: Short passages offer fewer patterns to analyze. Longer texts give detectors more signal to work with.

- AI model sophistication: Newer AI systems produce increasingly human-like text, making them harder to detect.

- Human editing: AI-generated content that has been substantially revised by a human becomes much harder to identify.

- Writing style: Creative, unconventional, or highly technical writing can confuse detectors trained on more standard prose.

- Language: Most detectors are trained primarily on English-language data and may perform less reliably on other languages.

Limitations: Why AI Detectors Aren’t Perfect

While AI content detectors provide helpful insights, they're not foolproof. Understanding where they fall short is essential to using them responsibly.

False Positives

A false positive occurs when a detector flags human-written content as AI-generated. This is perhaps the most damaging limitation, because it can unfairly penalize students, writers, and professionals. Common causes include:

- Formal or highly structured writing styles

- Writers who are not native English speakers (whose writing patterns may differ from the detector's training data)

- Technical or procedural content with naturally low burstiness

- Writers whose style is particularly clean, logical, or polished

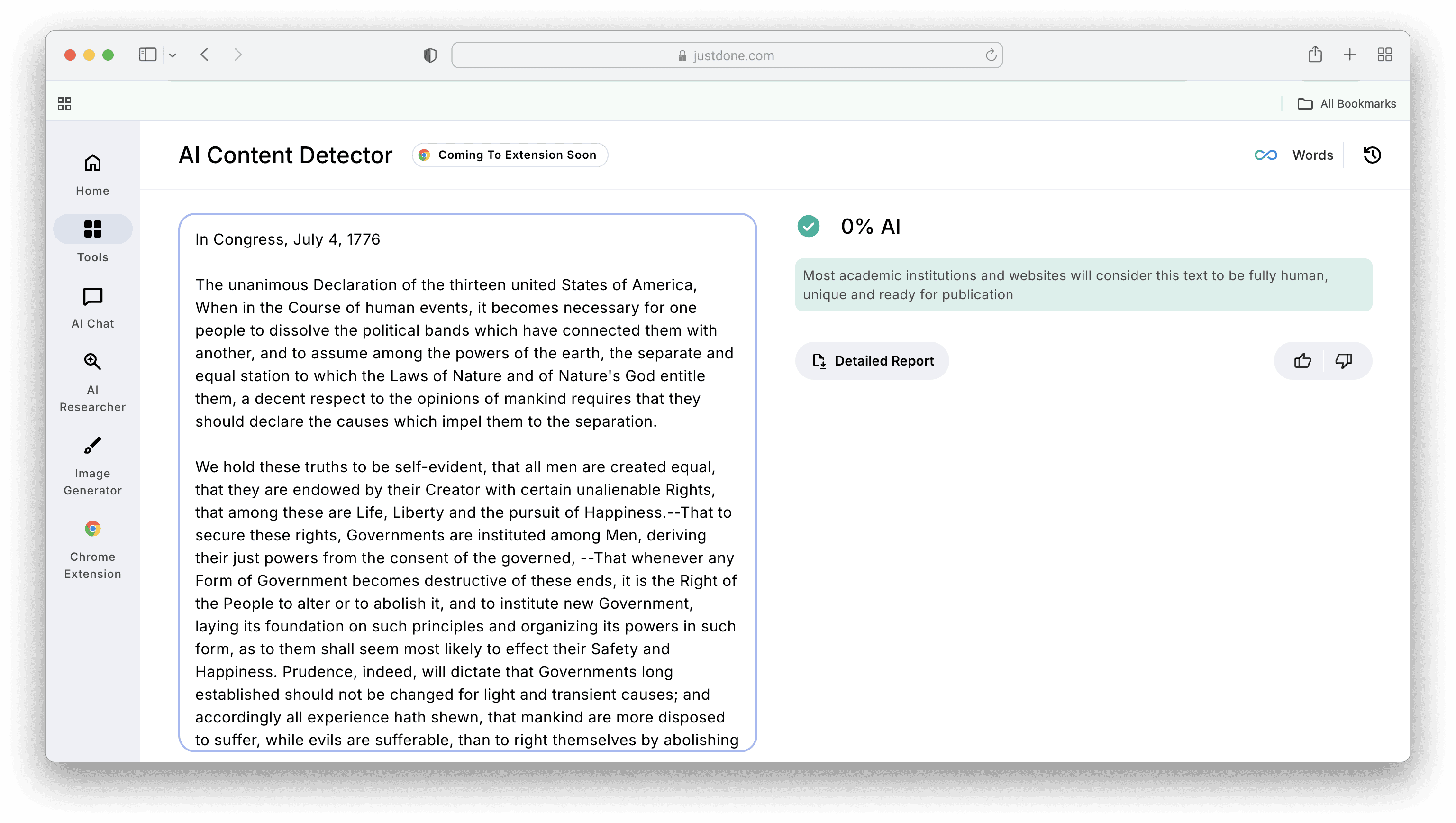

Historical documents are a striking example of how problematic false positives can be. The Declaration of Independence, written entirely by human hands in 1776, has been flagged as AI-generated by some detectors, simply because its formal, structured prose resembles what detectors associate with machine output.

However, more advanced AI checkers can correctly score historical documents and other texts written before generative AI existed at 0% AI probability.

That's how JustDone AI Detector checks the Declaration of Independence.

False Negatives

A false negative occurs when a detector fails to identify AI-generated content. As AI models become more sophisticated, they produce text that is increasingly difficult to distinguish from human writing. Someone who prompts a model to mimic a conversational style, introduce deliberate errors, or vary sentence length can often fool basic detectors.
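False positives and false negatives are easier to reason about once they are counted separately from overall accuracy. The sketch below does that for a made-up evaluation set; the counts are hypothetical and do not describe any real detector.

```python
def error_rates(results):
    """Compute accuracy, false positive rate, and false negative rate.

    `results` is a list of (actual, predicted) pairs, where each label
    is either "human" or "ai".
    """
    tp = sum(1 for a, p in results if a == "ai" and p == "ai")
    tn = sum(1 for a, p in results if a == "human" and p == "human")
    fp = sum(1 for a, p in results if a == "human" and p == "ai")
    fn = sum(1 for a, p in results if a == "ai" and p == "human")
    return {
        "accuracy": (tp + tn) / len(results),
        "false_positive_rate": fp / (fp + tn) if fp + tn else 0.0,
        "false_negative_rate": fn / (fn + tp) if fn + tp else 0.0,
    }


# Hypothetical evaluation set: 100 human texts and 100 AI texts.
sample = (
    [("human", "human")] * 94 + [("human", "ai")] * 6
    + [("ai", "ai")] * 90 + [("ai", "human")] * 10
)
rates = error_rates(sample)
print(rates)
```

In this invented sample the detector is 92% accurate overall, yet it still wrongly flags 6 of every 100 human writers and misses 10 of every 100 AI texts, which is why a single accuracy figure tells only part of the story.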

Evolving AI

Generative AI is improving faster than detection methods. Today's detectors are trained on outputs from current models, but new models are released regularly, and detection systems inevitably lag behind.

Context Blindness

Detectors analyze patterns, not meaning. They can't understand intent, assess whether an argument is coherent, or evaluate whether evidence is cited appropriately. A piece of writing can score "human" while being completely plagiarized, and score "AI" while being entirely original.

The best recommendation is to use an AI detector as part of a broader verification strategy and combine it with your own critical thinking to ensure accurate and fair results.

Best Practices for Working with AI Detectors

Over years of working with universities, research teams, and content platforms, a clear set of best practices has emerged for getting the most out of these tools.

First, scan early, not just at the end. Don't wait until your work is finished to run a detection check. Mid-draft checks help you notice when your writing has shifted toward the predictable, even-toned patterns that detectors flag, so you can course-correct while revising instead of scrambling at the last minute.

Second, never treat a score as a verdict. A high "AI likelihood" score is a signal to investigate further, not proof of wrongdoing. Always combine detector output with human judgment, context, and other verification methods.

Third, cross-check with multiple tools. Different detectors have different training data and thresholds. Using more than one gives you a broader, more reliable signal. Combine AI detection with plagiarism checking for the most complete picture.
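If you record scores from several tools, a small helper can show at a glance whether they agree. The sketch below is one possible way to summarize them; the detector names are placeholders, the scores are invented, and the 0.3 disagreement threshold is an arbitrary assumption rather than an established cutoff.

```python
import statistics


def summarize_scores(scores, disagreement_threshold=0.3):
    """Summarize AI-likelihood scores (0 to 1) from several detectors."""
    values = list(scores.values())
    spread = max(values) - min(values)
    return {
        "mean": statistics.mean(values),
        "spread": spread,
        # A wide spread means the tools disagree, so a human should look closer.
        "detectors_disagree": spread > disagreement_threshold,
    }


# Hypothetical scores for the same text from three placeholder detectors.
scores = {"detector_a": 0.82, "detector_b": 0.35, "detector_c": 0.60}
summary = summarize_scores(scores)
print(summary)
```

A large spread between tools is itself useful information: it suggests the text sits in a gray area where no single score should be trusted on its own.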

Fourth, build a writing audit trail. Keep your drafts. Use document version history. If you use AI for brainstorming or rephrasing, document that too. When a detector flags your work, a clear revision history is often more persuasive than any counter-argument.

Fifth, run calibration tests. Take one of your own essays and a fully AI-generated version. Run both through detection tools. This teaches you how structure, sentence variety, and tone affect detection scores and often reveals simple adjustments (breaking up repetitive sentence patterns, varying vocabulary) that can reduce a score without changing your ideas.

Sixth, be transparent. Whether in academic or professional settings, communicate clearly about how and when AI tools were used in your workflow. Transparency builds trust and reduces the risk of misunderstanding.

Try JustDone AI Detector as your go-to tool. It doesn't just flag content but explains which sentences are triggering the AI signal and why, giving you actionable guidance to improve your work while keeping your authentic voice intact.

Beyond How AI Text Detectors Work

I’ve seen AI detectors save students from accusations. I’ve also seen them cause chaos when misunderstood. At their best, they’re tools that teach us about our own writing habits and where we sound too polished, too generic, or too formulaic.

My advice, always: Don’t fear the tools. Master them. Use them to refine your tone, defend your originality, and grow as a writer, not just to pass some invisible test.

And if you need a tool that doesn’t just say “AI detected,” but actually helps you improve, JustDone’s AI Detector is the one I’d recommend. It’s what I use to coach students and professionals alike because it guides, not just judges.

F.A.Q.

How do AI detectors detect AI?

AI content detectors examine writing patterns, sentence construction, and stylistic signals to assess how likely it is that a text was AI-generated. They rely on machine learning models trained on extensive collections of both human and AI-produced content, but their results are probabilistic and can differ in accuracy.

How accurate are AI detectors?

No AI detector is 100% accurate. Accuracy varies significantly by tool and context. JustDone AI Detector reports 94% accuracy with sentence-level analysis. Because no tool is perfect, we recommend using AI detection alongside plagiarism checking and human review, never as a standalone verdict.

Can AI detectors detect ChatGPT?

Yes, most AI detectors are trained on ChatGPT outputs and can identify text generated by GPT-4, GPT-5, and similar models with reasonable accuracy. Advanced detectors like JustDone's are continuously updated to keep pace with new model releases, but some detectors lag behind the newest models.

What words trigger AI detection?

There are no specific “trigger words” that automatically flag text as AI-generated. Words like tapestry, elevate, crucial, or enhance don’t cause detection on their own. AI detectors analyze overall writing patterns, structure, tone consistency, and predictability, not individual words.

Why do AI detectors flag human content?

AI detectors analyze statistical patterns, not intent or meaning. Human writing that is formal, structured, repetitive, or unusually polished can share enough characteristics with AI-generated text to trigger a false positive. Writers who are non-native English speakers are particularly vulnerable, as their writing patterns may fall outside the range of the detector's training data. This is why detectors should always be used as one input in a broader assessment, never as a final judgment.