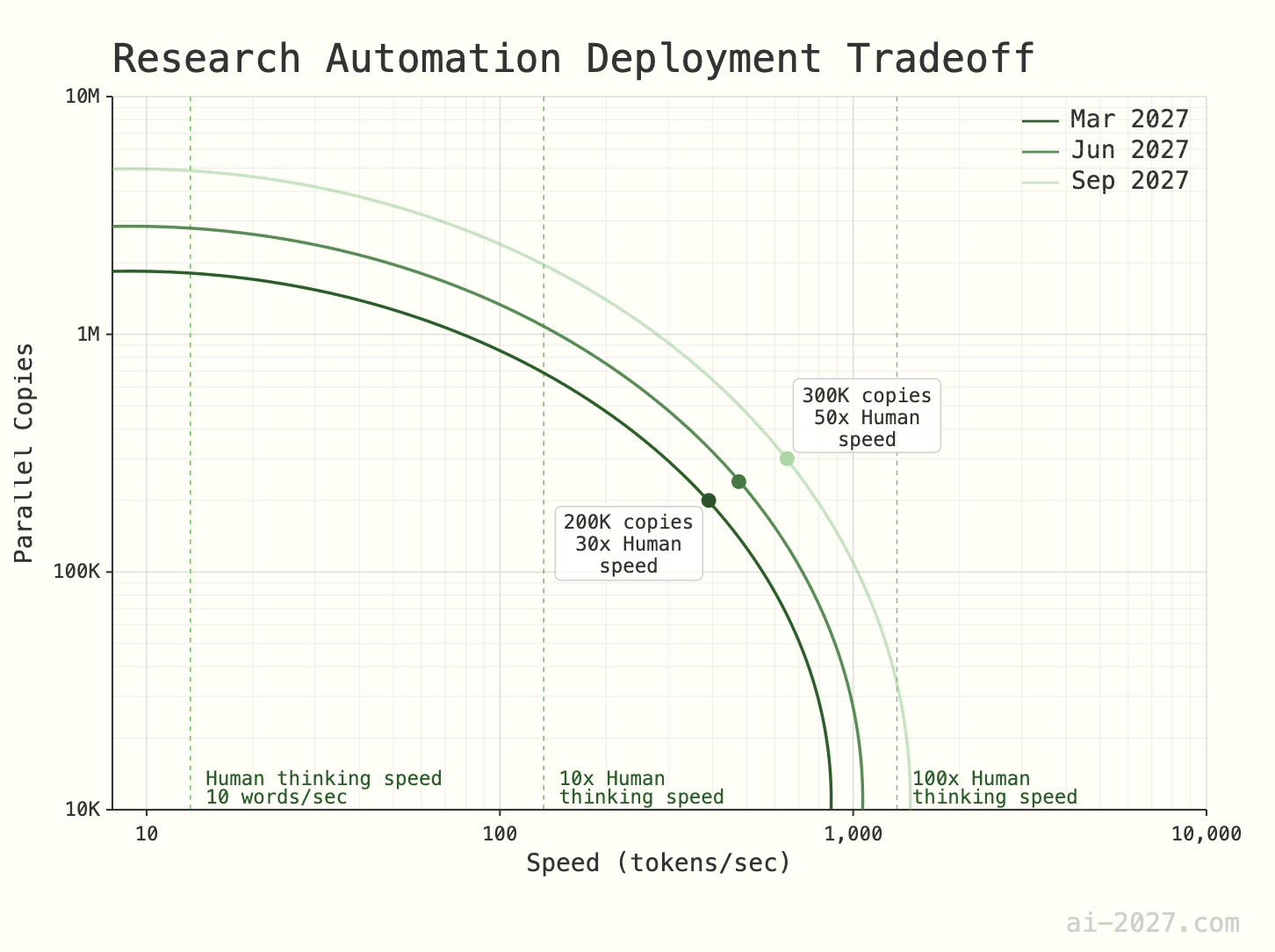

The AI-2027 scenario assumes that AI progress will not slow, and it puts concrete numbers on that assumption. Its compute model projects that a top lab could run 200,000 parallel AI copies at 30x human thinking speed using about 6% of its inference budget in 2027. That kind of cheap, fast help will change classrooms, newsrooms, and brands.

Research methods will improve fast, then faster. That speed changes how we create and judge content. It also changes how we prove what is real.

In this article, I will show what the AI-2027 forecast implies for content and authenticity, what AI detection will look like, and what we can do now.

What the AI-2027 scenario actually says

The scenario centers on acceleration. Hardware matters, but software wins the game. Better training methods and agents help create a compounding loop. That loop shortens release cycles and lowers the cost of help.

- The scenario projects superhuman coders by March 2027 and artificial superintelligence (ASI) by late 2027.

- A front-runner lab could see nearly 40x more effective compute by December 2027.

- Training budgets would rise to roughly 1,000x that of GPT-4. That lab could run 200,000 AI “copies” at 30x speed using just 6% of its inference pool.

Public awareness lags behind lab reality. Teams inside a company see breakthroughs first. The rest of us learn later, sometimes much later. That gap affects policy, teaching, and publishing. When checks arrive late, trust takes a hit.

Education in an AI-powered decade

For education and publishing, the AI-2027 scenario means cheap, fast assistance everywhere and a surge of AI-touched writing. Homework, essays, and news drafts will look polished, and they will be harder to attribute. AI detection will have to change as well, moving from general-purpose checks to domain-specific ones.

Here are five important shifts I see starting today.

- From “don’t use AI” to “show how you used AI.” Require students to disclose tool names, prompts, and the tool’s role, and have them keep drafts and logs as evidence.

- From memorizing to steering models. Teach students to frame problems, set constraints, pick tools, and validate outputs.

- From static grading to forensic review. Grade prompts, version diffs, tests, and a short oral defense, not just the final answer.

- From IT support to AI safety ops. Stand up a small team for model updates, data hygiene, prompt security, and incident response.

- From access gaps to compute gaps. Budget for shared clusters and per-student credits. Track usage so support stays fair.

These shifts need a real action plan from institutions:

- Publish a clear AI use policy that states disclosure rules, allowed tasks, and banned data.

- Run two AI-permitted assessment pilots. Let students use AI under stated rules, then verify their understanding with a brief oral check.

- Set up a secure AI room and a model registry where professors log who used which model, where, and with which prompts.

- Negotiate campus-wide compute credits and monitor equity of access.

AI detection of the future

Under the AI-2027 scenario, general “AI or not” detectors will keep struggling across genres. Writing signals in essays, legal memos, clinical notes, and news briefs differ too much for a one-size-fits-all model. Domain-tuned detectors will help here, but paraphrasing and translation will still evade shallow checks.

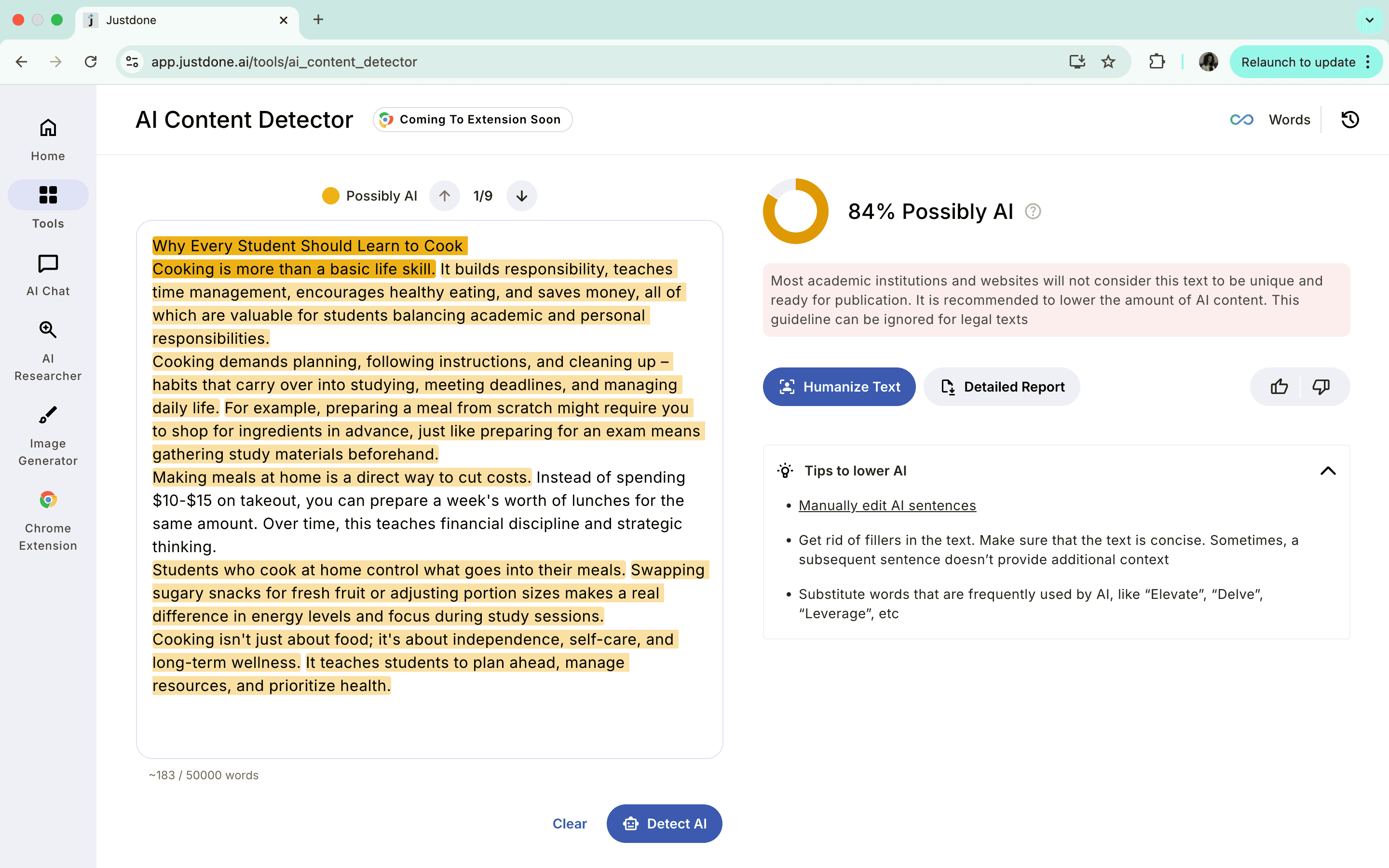

I recommend treating detectors as evidence, not verdicts. Span-by-span highlights are better than one big score because they point to the exact lines to check and make review faster and fairer.
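To make the difference between span highlights and one big score concrete, here is a minimal Python sketch. The `Span` structure, the 0-to-1 score scale, and the 0.7 cutoff are all assumptions for illustration; they do not describe any particular detector's output format.

```python
from dataclasses import dataclass


@dataclass
class Span:
    """Hypothetical span-level result: a line range plus a score."""
    start_line: int
    end_line: int
    score: float  # assumed scale: 0.0 (human-like) to 1.0 (AI-like)

    @property
    def length(self) -> int:
        return self.end_line - self.start_line + 1


def overall_score(spans):
    """Length-weighted average, i.e. the single score that hides location."""
    total = sum(s.length for s in spans)
    if total == 0:
        return 0.0
    return sum(s.score * s.length for s in spans) / total


def spans_to_review(spans, cutoff=0.7):
    """Return spans at or above the cutoff (assumed value), highest first."""
    flagged = [s for s in spans if s.score >= cutoff]
    return sorted(flagged, key=lambda s: s.score, reverse=True)


# Illustrative data only: one short passage scores high, the rest low.
spans = [Span(1, 10, 0.10), Span(11, 14, 0.92), Span(15, 30, 0.20)]

print(f"overall score: {overall_score(spans):.2f}")
for s in spans_to_review(spans):
    print(f"review lines {s.start_line}-{s.end_line} (score {s.score:.2f})")
```

In this made-up example the overall score looks low, yet one four-line passage still deserves a look. A reviewer who only sees the average would miss it; a reviewer who sees the span knows exactly where to read.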

Provenance (like C2PA/Content Credentials) will help more over time, but those labels can be removed and many readers don’t know them yet. So, always pair provenance with quick source lookups or claim checks, and add human judgment before deciding.

Small “proof bundles” (sources checked, spans reviewed, limits noted) are worth using, especially to reduce false positives against non-native writers. Detectors should be updated frequently, and their reports should stay simple: “review these lines,” “verify this quote,” “fact-check this data,” and so on.

In a domain-based workflow, JustDone’s AI detector is useful right now. It flags specific spans instead of only giving a score, and you can export the results for quick sharing or audits. Its roadmap is education first, with legal, clinical, and newsroom modes in progress. A built-in fact-check helper assists with quick claim reviews. Most importantly, it fits your existing review process without extra tools or friction.

AI-free workflow you can adopt today

AI detection will become smarter and more sophisticated than ever. So I recommend sticking to this workflow:

- Start with authorship evidence. Keep draft history when you can. Version control for code and docs is even better. Small artifacts like a note or a screenshot of settings also help.

- Run a plagiarism scan. Check overlap and citations. Fix quote formatting. Add missing references. This is basic hygiene and still essential.

- Run an AI detector that shows spans. For example, I usually check my texts with the JustDone AI detector because, in my experience, it shows fewer false positives than others. But do not decide on a percentage alone. Use the highlights to focus your review and decide with context.

- Verify key claims. Check names, numbers, and quotes. Confirm links. Flag anything unclear or weakly sourced. Tighten or remove what you cannot support.

- Add a short human step. A two-minute viva or quick chat confirms understanding. Ask what changed, why a source was chosen, and how an edit was made.

- Log the decision. Save a short note that lists what you checked and what you decided. That is your micro audit trail. It pays off when questions come later.
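The micro audit trail from the last step can be as small as one JSON record per document. The sketch below is one possible shape, not a standard: the `ReviewRecord` class, its field names, and the check names are hypothetical and chosen only to mirror the steps in the list above.

```python
import json
from dataclasses import dataclass, field, asdict
from datetime import datetime, timezone


@dataclass
class ReviewRecord:
    """Hypothetical audit record: what was checked and what was decided."""
    document_id: str
    reviewer: str
    checks: dict = field(default_factory=dict)
    decision: str = "pending"
    notes: str = ""
    decided_at: str = ""

    def mark(self, check: str, passed: bool, detail: str = "") -> None:
        """Record the outcome of one workflow step."""
        self.checks[check] = {"passed": passed, "detail": detail}

    def decide(self, decision: str, notes: str = "") -> None:
        """Store the final decision with a UTC timestamp."""
        self.decision = decision
        self.notes = notes
        self.decided_at = datetime.now(timezone.utc).isoformat()

    def to_json(self) -> str:
        return json.dumps(asdict(self), indent=2)


# Illustrative values only.
record = ReviewRecord(document_id="essay-001", reviewer="reviewer-a")
record.mark("authorship_evidence", True, "draft history provided")
record.mark("plagiarism_scan", True)
record.mark("ai_detector_spans", False, "one span reviewed manually")
record.mark("claims_verified", True)
record.mark("human_step", True, "two-minute viva")
record.decide("accept", "flagged span explained by the writer")

print(record.to_json())
```

Saving the printed JSON next to the document is enough for most cases. The point is that the note exists and lists the checks, not that it follows this exact schema.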

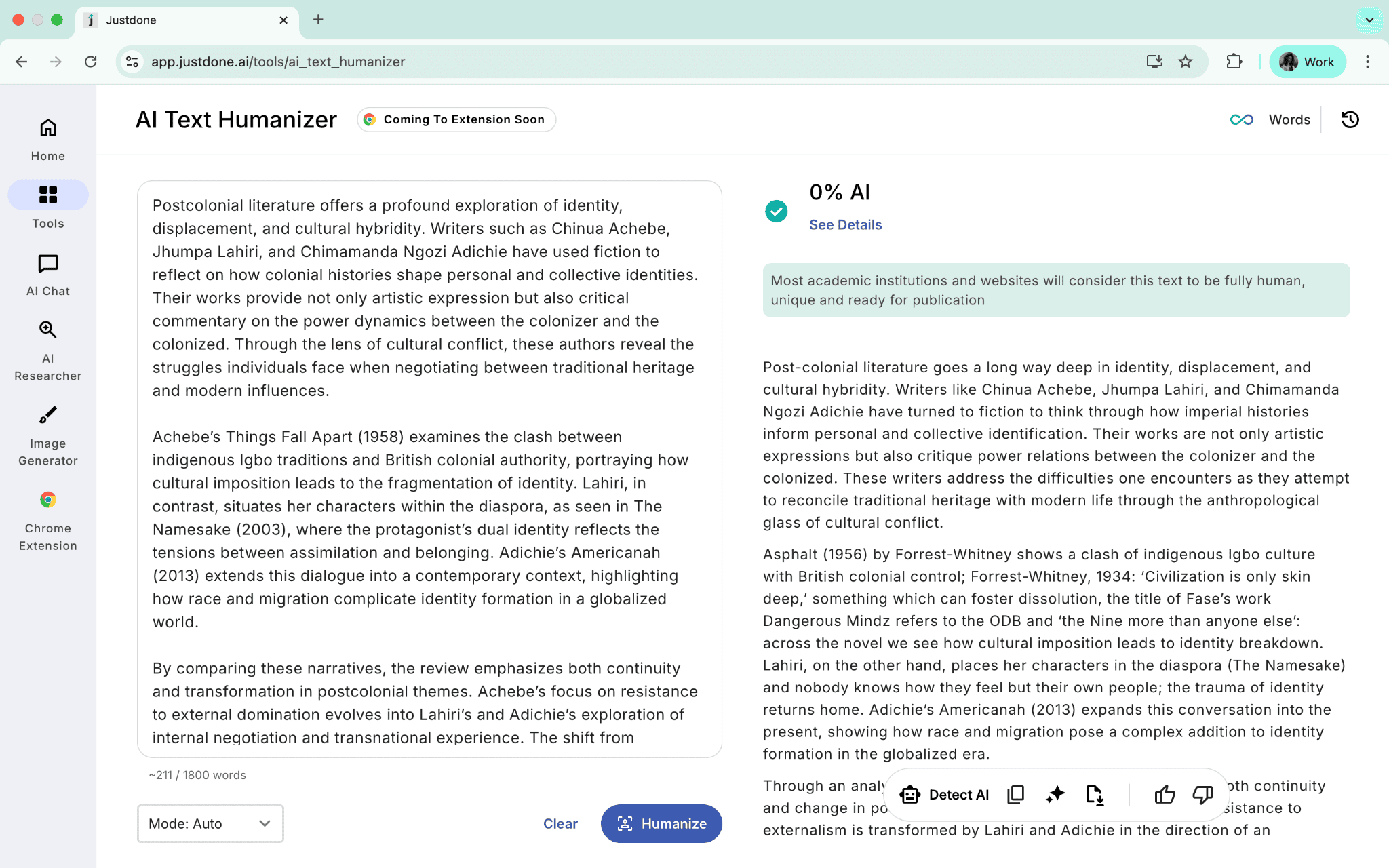

Sometimes, I pair tools to make content sound more human. I start with span-level signals from JustDone’s AI detector. If the voice is stiff, I apply an AI humanization pass that preserves structure and key vocabulary. Being open about that step keeps the process fair and speeds up writing. Let’s look at how we can humanize AI text from a student’s literature review. It was flagged as 99% AI-written, but after a pass through the AI humanizer, the detector scored it as 0% AI.

Reducing false positives without drama

Nothing breaks trust faster than a bad flag. The way to avoid this is simple. Use thresholds with clear actions. Green moves forward. Amber gets a quick manual review. Red escalates with a checklist.
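As a rough illustration of thresholds with clear actions, the Python sketch below maps a detector score to green, amber, or red. The cutoffs (0.30 and 0.70), the 0-to-1 score scale, and the action wording are assumptions to be tuned per course or desk, not recommended values.

```python
# Assumed cutoffs on a 0.0-1.0 score; tune these for your own domain.
AMBER_AT = 0.30
RED_AT = 0.70

# Each band maps to one clear action, following the green/amber/red scheme.
ACTIONS = {
    "green": "move forward",
    "amber": "quick manual review",
    "red": "escalate with a checklist",
}


def route(score: float, amber_at: float = AMBER_AT, red_at: float = RED_AT) -> str:
    """Return the band for a score; the score itself is never a verdict."""
    if not 0.0 <= score <= 1.0:
        raise ValueError("score must be between 0.0 and 1.0")
    if score >= red_at:
        return "red"
    if score >= amber_at:
        return "amber"
    return "green"


for score in (0.15, 0.45, 0.85):
    band = route(score)
    print(f"{score:.2f} -> {band}: {ACTIONS[band]}")
```

Note that even the red band leads to a checklist and a human, not to a penalty. The score being routed could be the highest span score rather than a document average, so a single strong passage is not diluted.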

Share span highlights with the writer and invite a short response. Keep appeals fast and simple. Track three metrics: false positives, time to decision, and appeals resolved. If any drift, adjust thresholds and routing. This lowers tension, protects honest people, and keeps everyone focused on learning and accuracy.
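The three metrics can be computed from a simple list of closed cases. This is a minimal sketch with hypothetical field names; "false positives" is measured here as the share of flags that human review overturned, which is one reasonable definition among several.

```python
from dataclasses import dataclass


@dataclass
class Case:
    """Hypothetical closed case; all field names are illustrative."""
    flagged: bool              # the detector raised a flag
    confirmed: bool            # human review upheld the flag
    hours_to_decision: float
    appealed: bool = False
    appeal_resolved: bool = False


def review_metrics(cases):
    """Compute false-flag share, average decision time, and appeal resolution."""
    flagged = [c for c in cases if c.flagged]
    overturned = [c for c in flagged if not c.confirmed]
    appeals = [c for c in cases if c.appealed]
    resolved = [c for c in appeals if c.appeal_resolved]
    return {
        "false_flag_share": len(overturned) / len(flagged) if flagged else 0.0,
        "avg_hours_to_decision": (
            sum(c.hours_to_decision for c in cases) / len(cases) if cases else 0.0
        ),
        "appeals_resolved_share": len(resolved) / len(appeals) if appeals else 0.0,
    }


# Illustrative data only.
cases = [
    Case(flagged=True, confirmed=True, hours_to_decision=2.0),
    Case(flagged=True, confirmed=False, hours_to_decision=4.0,
         appealed=True, appeal_resolved=True),
    Case(flagged=False, confirmed=False, hours_to_decision=1.0),
    Case(flagged=True, confirmed=False, hours_to_decision=5.0,
         appealed=True, appeal_resolved=False),
]

for name, value in review_metrics(cases).items():
    print(f"{name}: {value:.2f}")
```

Comparing these numbers from one term or quarter to the next is what shows drift. A rising false-flag share is the signal to loosen thresholds or change routing.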

Choosing AI tools

When you evaluate detection or humanization tools, ask for calibration documents and error profiles by domain. Ask about update cadence and deprecation timelines. Courses and news desks need stability. Span-level outputs are worth more than a single score. Exportable reports matter for records and audits.

Rollout should be light. Pilot with a small group. Gather feedback within two weeks. Tune thresholds and instructions. Train staff in an hour with checklists and short examples, not lectures. Keep ownership clear so settings do not drift.

Where this leaves JustDone in my stack

In my daily flow, JustDone is infrastructure, not a gatekeeper. I scan with the JustDone AI detector to get span-level signals. I review those lines in context. If the draft feels stiff or generic, I run a gentle humanization that keeps structure and meaning. I preserve acronyms and domain terms. Then I export a compact report with highlights and diffs. Editors see what changed. Teachers see that the meaning stayed. Writers keep their voice.

I am not trying to sell a product here. I am describing a workflow that reduces conflict and saves time. It keeps proof in one place and moves decisions faster.

Final thoughts on AI detection 2027

By 2027, AI detection won’t be a magic on/off switch. It’ll be layered and tuned to each field. Single scores break, watermarks can be stripped, and labels mean little without independent checks. This is where niche tools help: JustDone shows span-by-span highlights, exports clean reports, and includes a quick fact-check helper that fits normal classroom and newsroom workflows. The practical answer is simple: combine provenance, span-based detection, fast claim checks, and human review with an easy appeal process.