Key takeaways:

- ESL writing gets flagged because detectors expect native-English patterns.

- Translation and free humanizers usually make AI scores higher, not lower.

- Use a clear workflow and better tools to keep your writing natural and human-like.

AI detection tools were created to spot writing that looks like it was produced by a machine. But most of these systems were trained mainly on English text written by native speakers. This creates a real challenge for ESL (English as a Second Language) students: your writing may be original, but a detector may still flag it because it doesn’t “fit” the native-English pattern it expects.

In this guide, you’ll learn why your writing may be flagged as AI-generated and what you can do to keep it sounding natural.

Why ESL Writing Gets Misread by AI Detectors

Most AI detectors work by measuring patterns: predictability, rhythm, and structure. This becomes a problem because, as a non-native writer, you may naturally write:

- shorter sentences

- simpler or more predictable grammar

- fewer idioms

- more direct structures, with sentence logic carried over from another language

Detectors often mark these features as “AI-like”, as if the writing were too simple or too predictable. This doesn’t mean the text is fake; it only shows that the model was not trained to account for how non-native speakers write.

So when detectors check your work, they see pattern overlap, not intent. The detector isn’t reading meaning. It’s reading statistics.
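To make “reading statistics” a little more concrete, here is a toy Python sketch of one such signal: how much sentence length varies across a text (sometimes called burstiness). It is an illustration under simplifying assumptions, not how JustDone, Turnitin, or any other detector actually scores text; many detectors rely mainly on language-model statistics such as how predictable each word is. The function names and the naive sentence splitter are hypothetical.

```python
import re
import statistics


def sentence_lengths(text: str) -> list[int]:
    """Word count per sentence, using a naive split after '.', '!' or '?'."""
    sentences = [s for s in re.split(r"(?<=[.!?])\s+", text.strip()) if s]
    return [len(s.split()) for s in sentences]


def length_variation(text: str) -> float:
    """Coefficient of variation of sentence length (std dev / mean).

    A toy 'rhythm' signal: 0.0 means every sentence has the same length.
    This is NOT the scoring method of any real detector.
    """
    lengths = sentence_lengths(text)
    if len(lengths) < 2:
        return 0.0  # not enough sentences to measure variation
    return statistics.pstdev(lengths) / statistics.mean(lengths)


if __name__ == "__main__":
    uniform = "I like tea. You like tea. We drink tea."
    varied = ("I like tea. Yesterday, after a long walk through the old market, "
              "we finally sat down to drink it together. Good.")
    print(length_variation(uniform))  # → 0.0
    print(length_variation(varied))
```

Uniform sentence lengths give a value near zero, while more varied writing scores higher. A text made of short, similar sentences, whether from a non-native writer or from repeated machine translation, looks “flat” on a measure like this, which is one way perfectly human writing can overlap with statistical patterns a detector associates with AI.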

Another issue is cultural writing style: different languages have different writing conventions.

Some languages prefer long, flowing sentences; others use more passive voice; others avoid subjective statements. AI detectors don’t account for any of this, so when they see an unfamiliar pattern, they may mark it as suspicious.

The Translation Myth: Why It Makes AI Detection Worse

Many students hope that translating their essay through multiple languages will help them dodge AI detection. But this method rarely works. In fact, repeated translation often makes your writing more detectable.

What usually happens after translating text through several languages:

- the writing becomes overly smooth and flat

- sentence length becomes uniform

- natural idioms disappear

- predictable grammar increases

Each of these traits tends to raise your AI score. Machine translation is structured and formulaic, which is exactly what detectors look for. Even if the original text was human-written, translation can make it sound machine-produced.

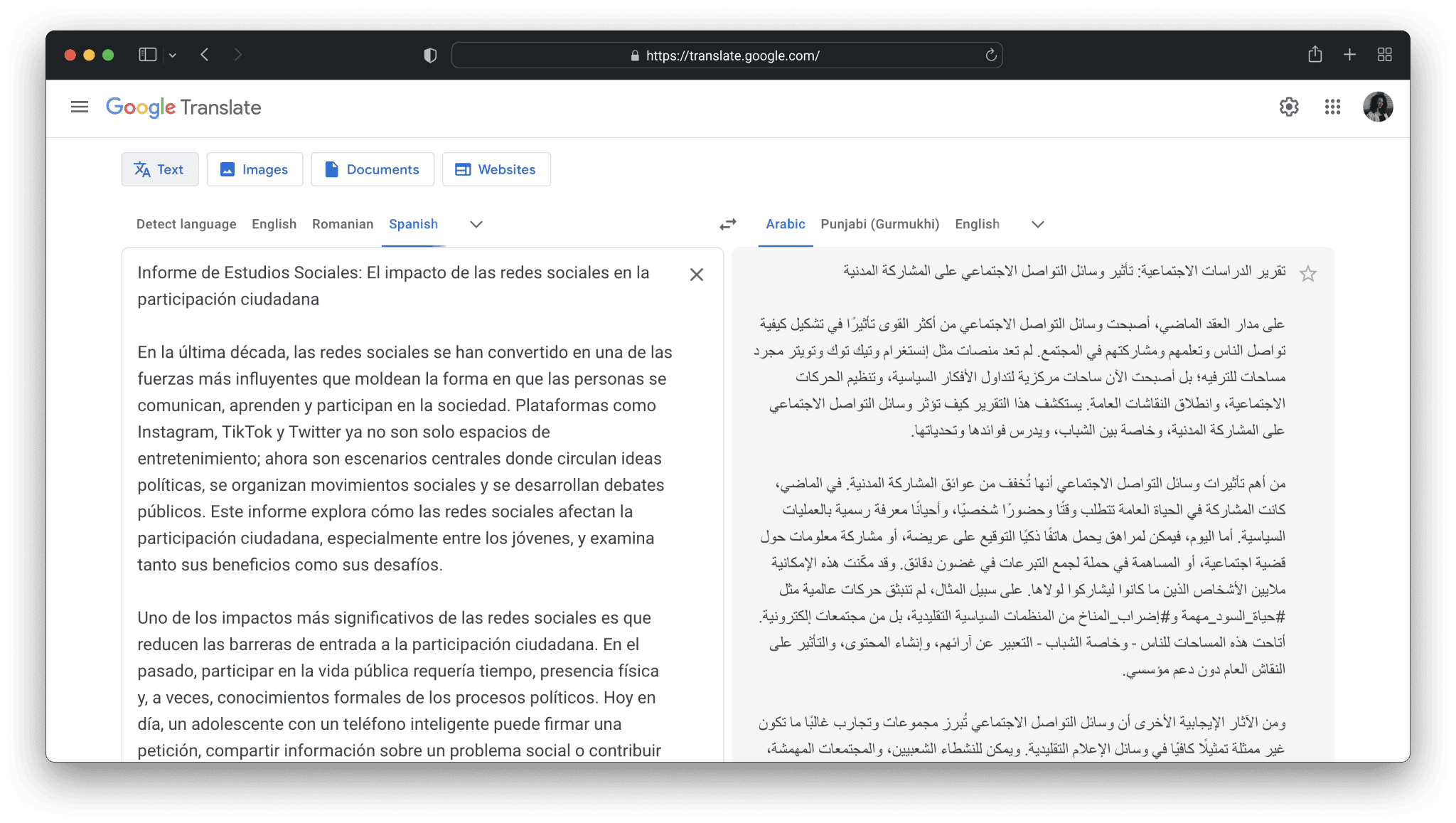

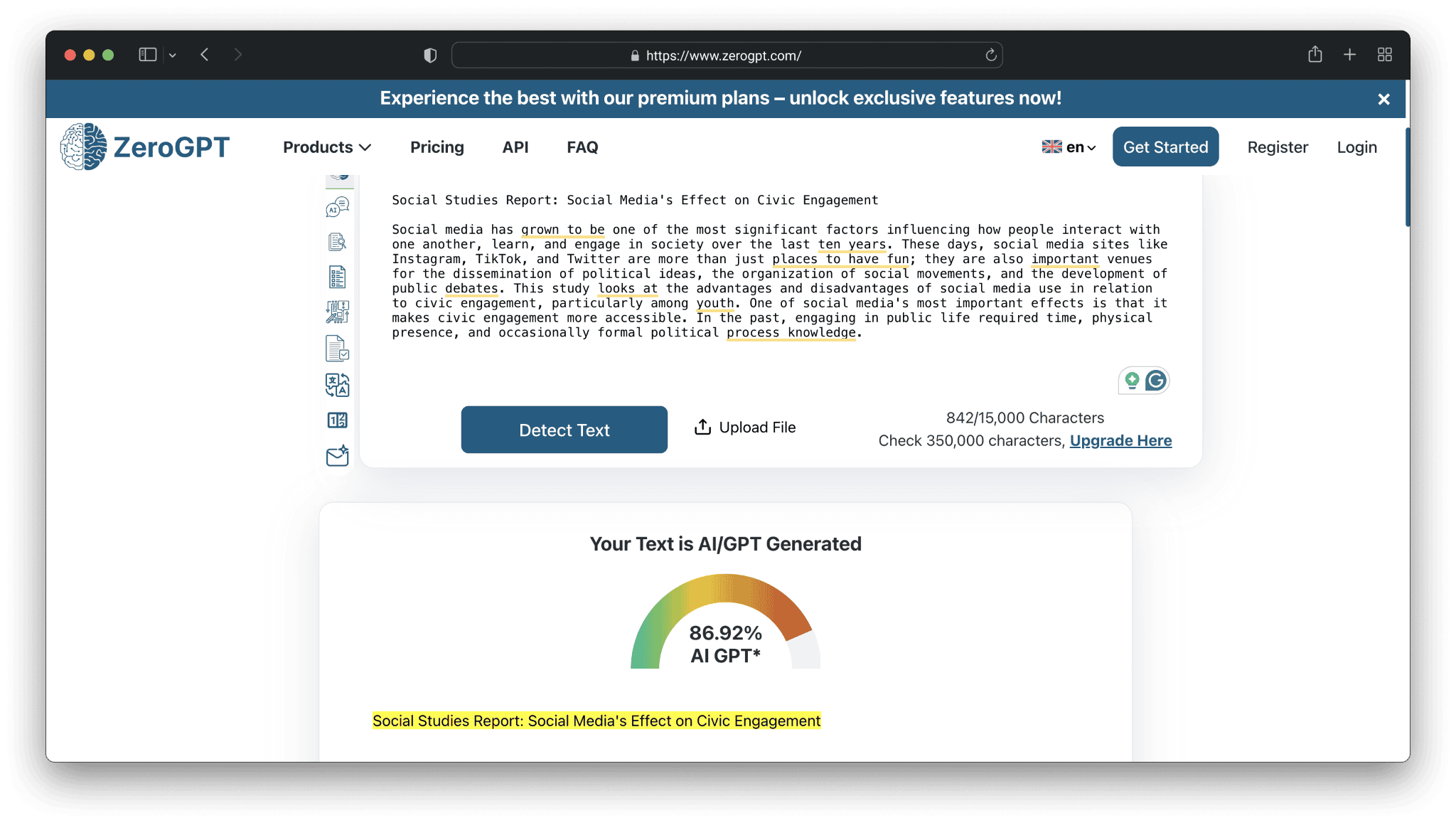

Let’s look at the detection results for a social studies report that went through three rounds of translation. It was first written in English and translated into Spanish with Google Translate. Then it was translated from Spanish into Arabic. Finally, the text was translated from Arabic back into English.

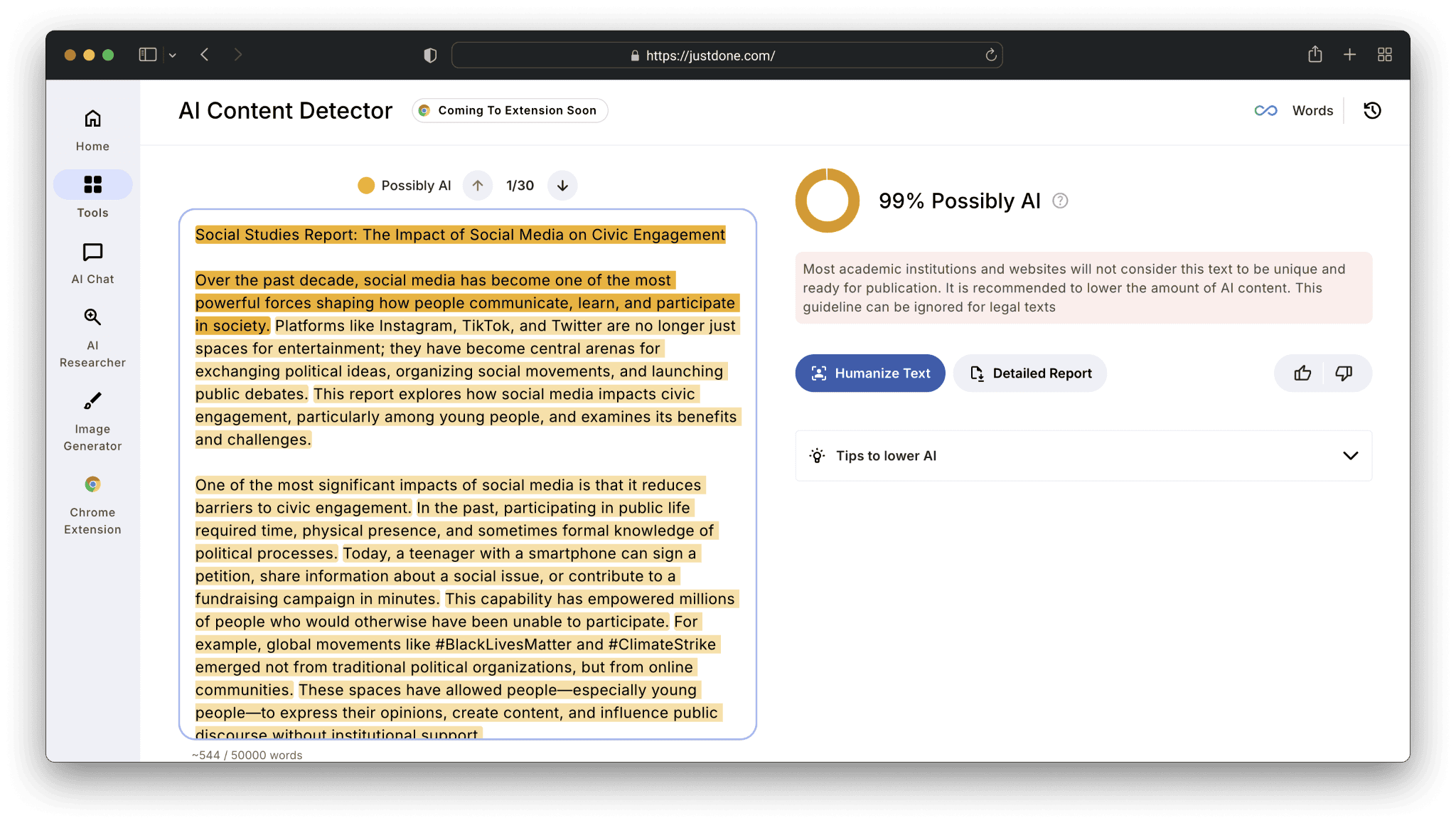

I put the result of this translation into JustDone’s AI detector. The text was flagged as 99% AI-generated.

So in this test, the multiple-translation trick didn’t work. Don’t rely on translation tools to avoid being flagged; instead, work on your own tone of voice to make your writing less suspicious to AI checkers.

Why Free Humanizers Make Things Worse

When ESL students can’t rely on translation, many turn to free “AI humanizer” tools. Unfortunately, many free tools rely on small, basic language models that rewrite text in a bland, generic academic style.

Free online humanizers typically cause these problems: grammar becomes too perfect, sentences become similar in length, the tone becomes robotically formal, and personal style disappears.

AI detectors read this as too polished to be written by a human. Because free tools remove the small mistakes and imperfections that make human writing human, your chances of being flagged actually increase.

You can test this easily: run your text through a free humanizer and then check it with any detector. The AI score usually jumps.

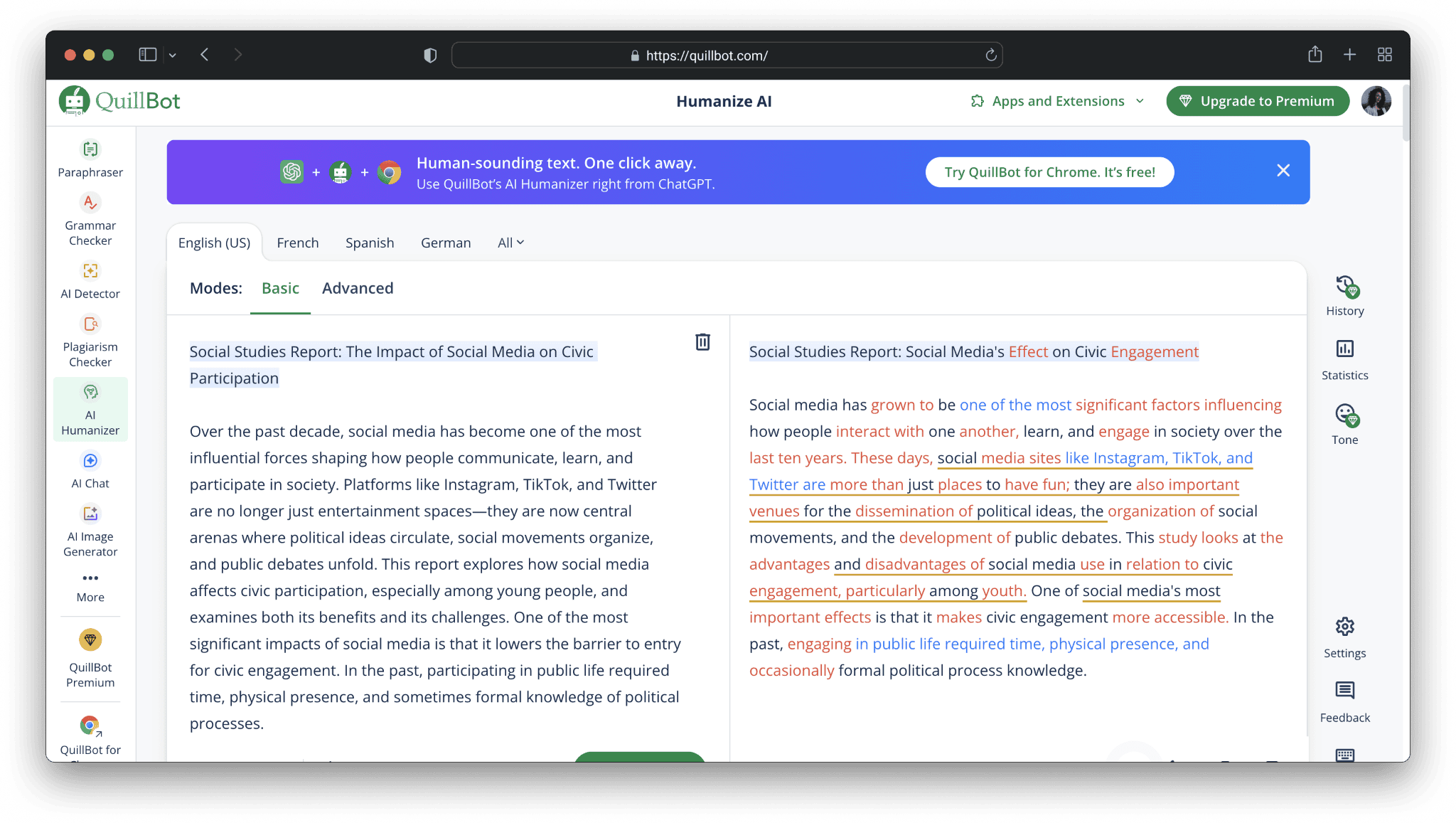

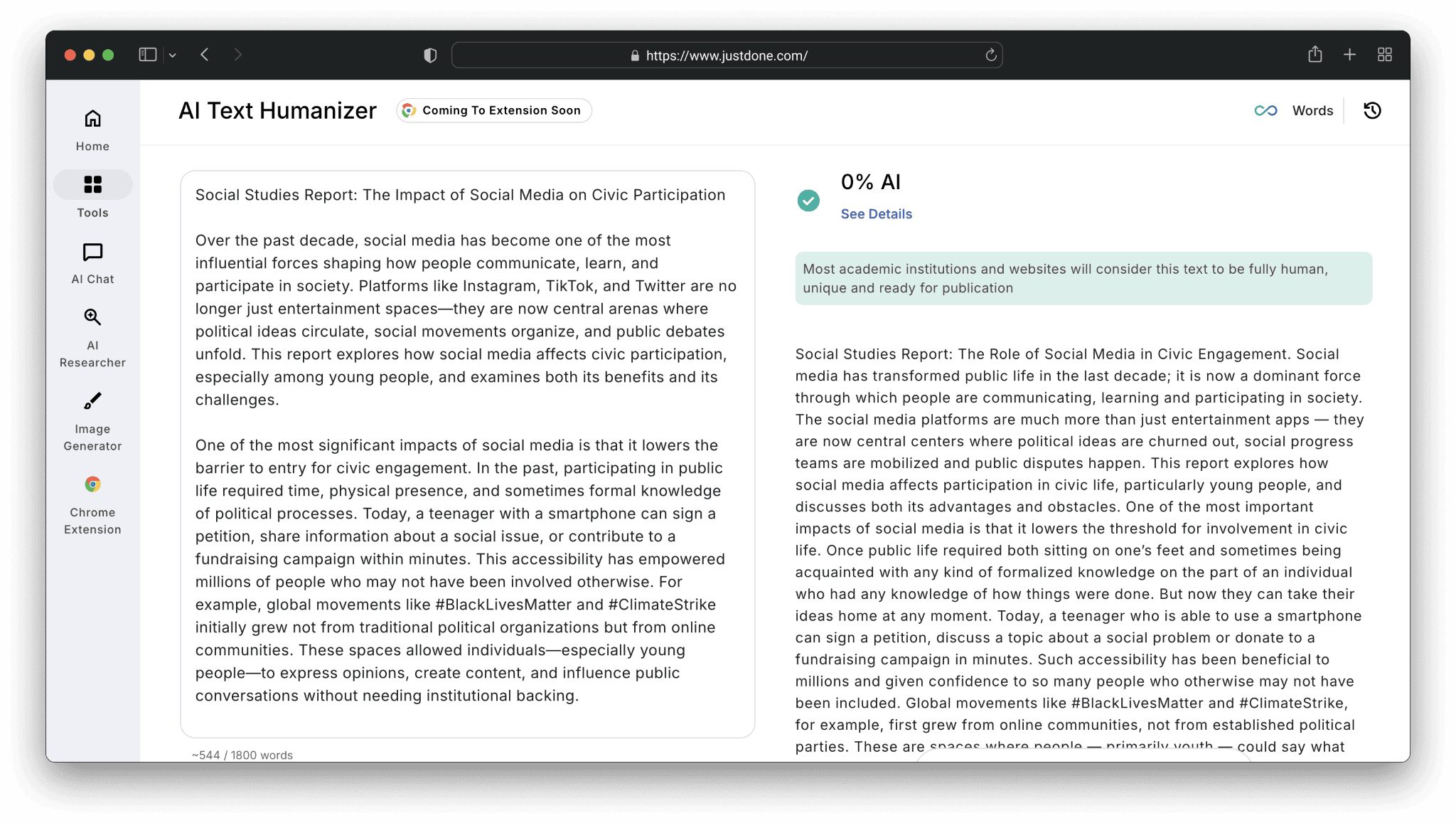

For instance, I humanized a student’s social studies report with a free tool and got the output shown above.

Then I ran it through the advanced mode of JustDone’s AI detector and got a 100% AI-detected result.

A second AI checker also flagged most of the text as AI-generated.

Based on this test, a free public humanizer is unlikely to produce text that avoids triggering AI detectors.

What Actually Works Better for Multilingual Writing

Some tools are built differently. Rather than rewriting your text into AI-like perfection, certain paid solutions (including JustDone and others) try to preserve your rhythm, small imperfections, phrasing choices, and multilingual logic.

This is why the AI score usually goes down: not because the tool hides AI patterns, but because it brings back the variation typical of human writing.

Why higher-quality tools do better:

- they use multilingual datasets

- they measure sentence behavior, not just word statistics

- they don’t force native-speaker grammar into every sentence

- they allow natural variation instead of flattening your voice

When an ESL student rewrites a paragraph using a better humanizer, the result usually reads like their own writing, only clearer and more natural.

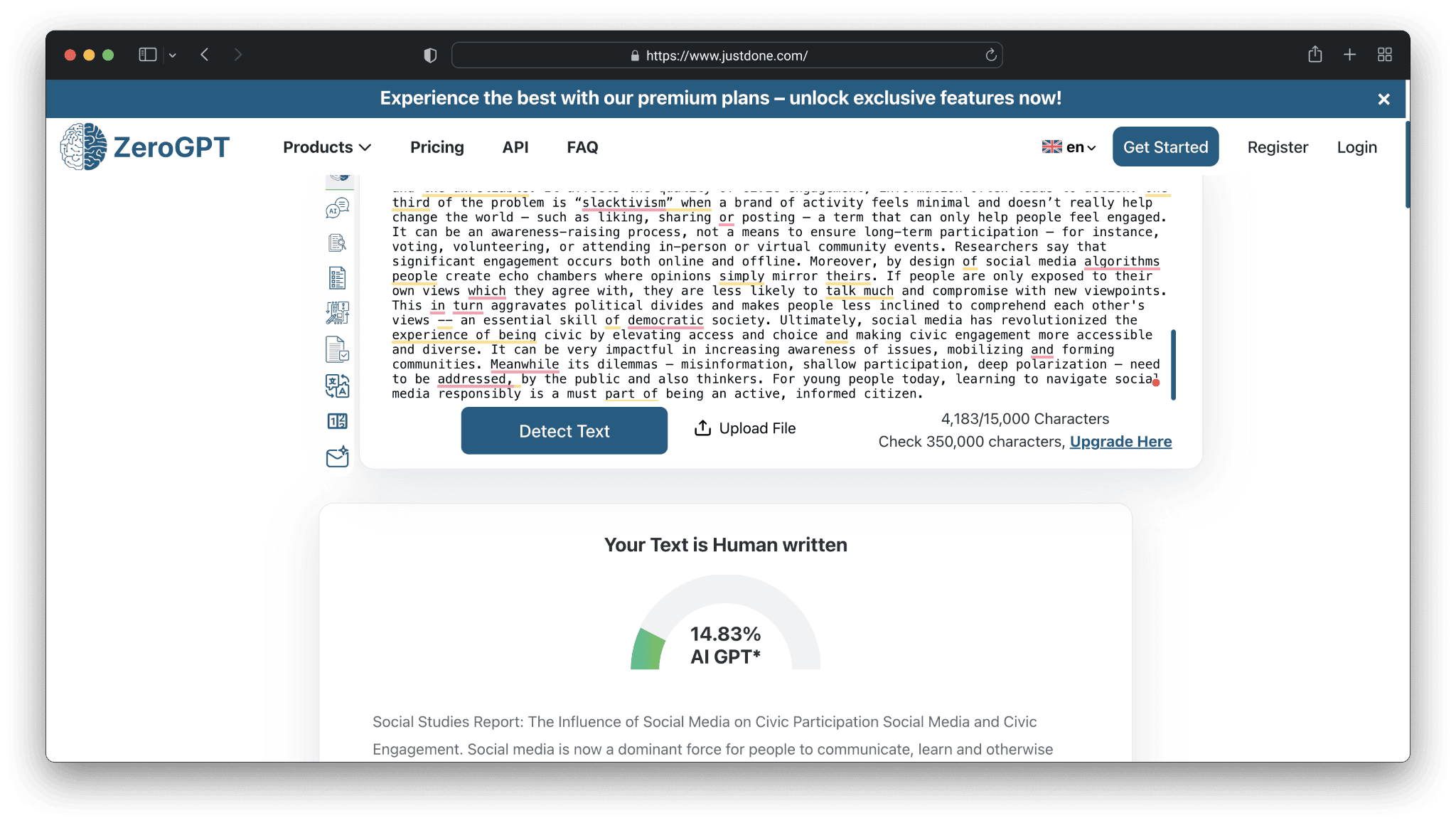

For instance, when I ran the social studies report from the tests above through JustDone’s AI humanizer in “Sound human” mode, I got an output rated 0% AI-detected.

However, no detector is 100% accurate, and results from different checkers can vary greatly. For instance, ZeroGPT flagged the same output as 14.83% AI-generated.

So treat an AI humanizer as a helper that points you in the right direction, not as a final answer. Always double-check its output (headings, sentence structure), even if it shows 0% AI text.

A Safe AI-Detection Workflow for ESL Students

There is no single trick that guarantees a “0% AI” score. But there is a safe workflow that reduces false flags and keeps your writing authentic.

Start by keeping your early drafts, notes, and screenshots of your progress. If you ever need to prove authorship, this evidence helps more than anything else.

When editing, avoid translating your whole paper into another language; it usually makes the text look machine-generated. If some sections sound stiff or too formal, rewrite just those parts instead of running the whole document through a rewriting tool.

It also helps to test your work in smaller pieces rather than uploading the whole essay. This lets you locate exactly where the detector sees a problem.
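If you want to test your work piece by piece, a small script can split an essay into paragraph-sized chunks that you then paste into a detector one at a time. This is a hypothetical helper, not a feature of any detector. It assumes paragraphs are separated by a blank line, and the 150-word default is an arbitrary choice, so check whether your detector requires a minimum text length and adjust `max_words` accordingly.

```python
def split_into_chunks(text: str, max_words: int = 150) -> list[str]:
    """Group blank-line-separated paragraphs into chunks of about max_words words.

    Hypothetical helper for testing an essay section by section.
    """
    chunks: list[str] = []
    current: list[str] = []
    count = 0
    for para in (p.strip() for p in text.split("\n\n")):
        if not para:
            continue  # skip empty paragraphs
        words = len(para.split())
        # Close the current chunk if adding this paragraph would exceed the limit.
        # A single paragraph longer than max_words still becomes its own chunk.
        if current and count + words > max_words:
            chunks.append("\n\n".join(current))
            current, count = [], 0
        current.append(para)
        count += words
    if current:
        chunks.append("\n\n".join(current))
    return chunks


if __name__ == "__main__":
    essay = "First paragraph of the essay.\n\nSecond paragraph.\n\nThird paragraph."
    for i, chunk in enumerate(split_into_chunks(essay, max_words=5), start=1):
        print(f"--- Chunk {i} ---")
        print(chunk)
```

Keeping whole paragraphs together, rather than cutting at an exact word count, means each chunk you test still reads as a complete unit, so a high score points you to a specific paragraph you can revise.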

If one detector shows an extremely high AI score while another shows a low one, you may be looking at a false positive, which is common for multilingual writers.

Why Non-Native Writers Need Better Tools, Not More Tricks

The truth is simple: most AI detectors were not built for multilingual writing. They are not language-neutral, and they make predictable mistakes with non-native patterns.

Translation doesn’t fix this.

Free humanizers often make it worse.

And rewriting everything in a “perfect” academic tone is the fastest way to get flagged.

Better tools are not about cheating detection. They’re about making sure the system reads your writing fairly. For many students, this saves time, stress, and unnecessary rewrites at the end of the term.

Until AI detection becomes truly multilingual, ESL students deserve tools and workflows that protect their authentic writing voice, rather than erase it.

Frequently Asked Questions

Why do AI detectors often make mistakes with ESL writers?

Most detectors are trained primarily on texts written by native English speakers. If your text does not match those patterns, the system may mark it as AI.

What do detectors see as “suspicious”?

Short sentences, simple vocabulary, repeated transitions, and literal phrasing. These are normal for non-native English speakers but often misread as AI-like.

Some detectors also overreact to repeated sentence structures.

What is a “false positive” and why is it a problem?

A false positive happens when a tool incorrectly labels human writing as AI-generated. Non-native writers experience this more often, which can put them in a position where they must “prove” authorship or defend original work.

Is Turnitin the most accurate AI detector for ESL writers?

Not really.

Turnitin claims high accuracy, but its own figures point to problems: Turnitin has reported a sentence-level false positive rate of about 4%, meaning roughly 1 in every 25 human-written sentences may be wrongly flagged as AI. Turnitin says it trains its system on diverse data, including ESL writing, but real-world results often differ.
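To put the 4% figure in perspective, here is a back-of-the-envelope Python sketch. It assumes, as a simplification, that every human-written sentence has the same 4% chance of being flagged; real flags are not spread that evenly, so treat the result as a rough average rather than a prediction for any single essay. The function name is hypothetical.

```python
def expected_false_flags(human_sentences: int, false_positive_rate: float = 0.04) -> float:
    """Average number of human-written sentences wrongly flagged as AI.

    Assumes every sentence has the same false-positive rate (a simplification).
    """
    if human_sentences < 0 or not 0.0 <= false_positive_rate <= 1.0:
        raise ValueError("need a non-negative sentence count and a rate between 0 and 1")
    return human_sentences * false_positive_rate


if __name__ == "__main__":
    # A 4% rate is 4 in 100, i.e. 1 in 25 sentences.
    for n in (25, 50, 100):
        print(f"{n} sentences -> about {expected_false_flags(n):.0f} flagged on average")
```

For a 50-sentence essay, that works out to about 2 sentences flagged on average (50 × 0.04 = 2), even if every word is your own.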

Can I trust a detector if my text gets a high “human score”?

Not completely. Detectors only give probabilities, not guarantees.

A high “human score” doesn’t guarantee the text is truly human-written. People can lightly edit AI drafts or mix human and AI text in ways detectors cannot reliably catch.

Do universities have special rules for ESL students?

Usually not.

Most institutions use the same rules and the same detection tools for all students, even though these tools are less accurate for multilingual writing. As a result, ESL students are flagged more often simply because their grammar or phrasing is different from native English patterns.