Key takeaways:

- AI scores are estimates, not verdicts. A high score doesn't prove you used AI, because structured writing, formal tone, or non-native English can all trigger false positives.

- The acceptable threshold depends on where you're submitting. In academic settings, anything above 20% can raise concerns.

- A high score is fixable, and JustDone makes it easier. Adding personal insight, rephrasing templated phrases, and varying sentence structure can lower your score significantly.

What is an AI score, and why does it matter? In this guide, we'll look at AI detection scores and how AI detectors work.

When you run your writing through an AI detector, you get back an AI detection score: 45%, 78%, 10%, and so on. What does that number actually mean? What percentage of AI is acceptable, and what percentage is bad?

You'll learn how to interpret an AI score correctly and how to read the results in the right context. Let's break it down together.

What Percentage of AI Is Acceptable?

There's no universal rule that says "X% is fine." But after working across platforms like Turnitin, GPTZero, and JustDone's AI Detector, I can give you some realistic benchmarks to work with.

According to JustDone's guide to AI score interpretation:

| AI Score Range | Interpretation | What You Should Do |
|---|---|---|
| 0–20% | Low likelihood of AI | Generally safe, but review anyway. Even human text can look robotic |
| 20–50% | Mixed signals | Review your writing voice. Edit overly formal or vague sentences. Add personal insight or specific examples |
| 50–80% | Likely AI-generated or heavily edited | Rewrite key sections. Make sure your work reflects your ideas and thought process |
| 80–100% | Strong AI signature | Avoid submitting this version. Rework content completely, or start fresh using your own words |

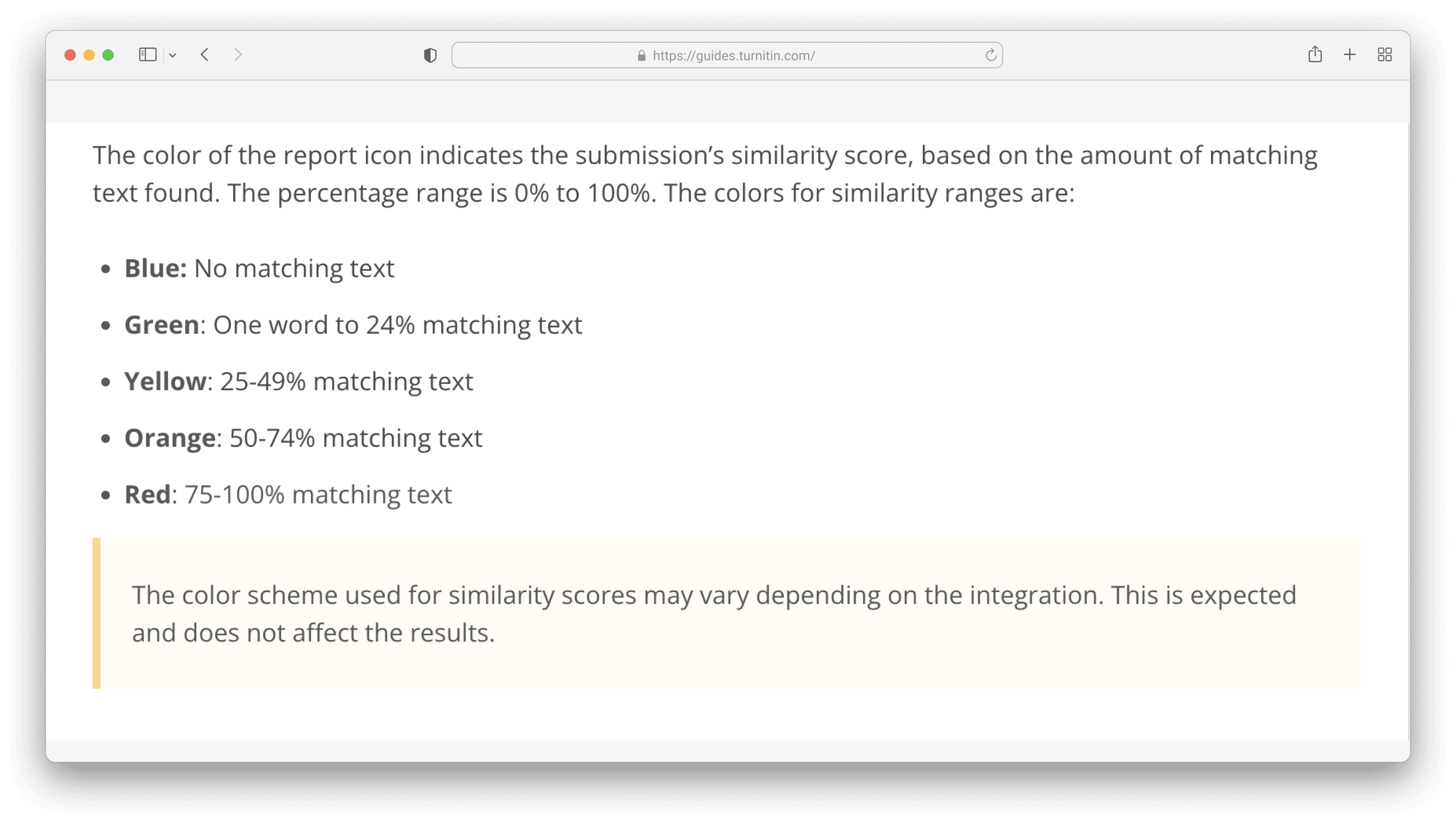
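The score bands in the table above amount to a simple lookup. Here is a minimal sketch, assuming a score between 0 and 100 and assuming that a score sitting exactly on a boundary (20, 50, or 80) falls into the lower band, since the table doesn't say which band a boundary value belongs to. The function name `interpret_ai_score` is hypothetical and not part of any JustDone API.

```python
def interpret_ai_score(score: float) -> str:
    """Map an AI detection score (0-100) to the interpretation bands in the table.

    Assumption: boundary values (20, 50, 80) belong to the lower band.
    """
    if not 0 <= score <= 100:
        raise ValueError("score must be between 0 and 100")
    if score <= 20:
        return "Low likelihood of AI"
    if score <= 50:
        return "Mixed signals"
    if score <= 80:
        return "Likely AI-generated or heavily edited"
    return "Strong AI signature"


# The example scores mentioned at the start of this guide, plus the 84% essay.
for s in (10, 45, 78, 84):
    print(f"{s}% -> {interpret_ai_score(s)}")
```

Remember that the band only tells you which row of the table to read; the "What You Should Do" column is still the part that matters.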

What percentage of AI is acceptable on Turnitin?

Turnitin doesn’t publish a universally accepted threshold, because schools interpret its scores differently. But there’s one practical detail students should know: some Turnitin-style reporting treats low AI percentages as less reliable and may not show detailed highlights until the score is higher.

For example, Turnitin’s documentation for iThenticate describes cases where scores in a low range show only an indicator rather than full reporting and highlighting, because the tool has lower confidence in small signals.

Here's what Turnitin's guide shows:

What students usually hear from instructors:

- Below 20%: good

- 20-50%: may trigger a conversation or manual review

- 50% or higher: more likely to be questioned, especially without drafts or other proof of your writing process

Bottom line: don’t treat Turnitin as a pass/fail number. Treat it as “will this raise a question?”

Note: Educators may interpret AI scores differently. Turnitin does not provide official thresholds.

How Accurate Are AI Detection Scores

Scores vary by detector: GPTZero tends to cluster purely AI-generated texts near 90–100%, while Originality.ai spreads its scores across a wider range with more confidence.

Here's how reported accuracy and error rates compare across detectors:

| Detector | Approx. Accuracy %* | False Positive Rate | False Negative Rate |
|---|---|---|---|
| GPTZero | 80-98% | ~0-2% | ~30-35% |
| Originality.ai | 97-99% | <1% | <5% |
| Turnitin | ~86% | <1% (long texts) | ~14% (esp. hybrids) |
| JustDone | 95-99% | <1% | <7% |

*Accuracy percentages are estimates that vary significantly depending on text type and length.
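Even a false positive rate under 1% adds up once a detector checks many essays. As a rough worked example, the expected number of wrongly flagged human-written essays is the number of human essays times the false positive rate, and the expected number of missed AI essays is the number of AI essays times the false negative rate. The class size and rates below are hypothetical, chosen only to illustrate the arithmetic, and `expected_errors` is a hypothetical helper name.

```python
def expected_errors(human_essays: int, ai_essays: int,
                    false_positive_rate: float,
                    false_negative_rate: float) -> tuple[float, float]:
    """Return (expected false flags, expected missed AI essays).

    Rates are fractions: 0.01 means 1%.
    """
    false_flags = human_essays * false_positive_rate
    missed_ai = ai_essays * false_negative_rate
    return false_flags, missed_ai


# Hypothetical course: 400 human-written essays and 100 AI-written ones,
# checked by a detector with a 1% false positive and 10% false negative rate.
flags, missed = expected_errors(400, 100, 0.01, 0.10)
print(f"~{flags:.0f} honest essays flagged, ~{missed:.0f} AI essays missed")
```

This is why a single flag is best treated as a prompt for review rather than proof.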

What Is an AI Score

Your AI detection score is a metric that estimates how likely it is that your text was generated or influenced by artificial intelligence. How is it calculated? By scanning for patterns in sentence structure, vocabulary, and predictability that are common in AI-generated content.

However, these scores are estimates, not proof. They simply suggest that some parts of your writing resemble typical AI writing. That's where incorrect predictions creep in: even your own original work might get flagged, especially if you naturally write in a highly structured or formal way. On the flip side, skillful editing of AI-generated text can lower the score significantly.

Critical finding: a Stanford study found that about 20% of essays by non-native English speakers were wrongly flagged as AI-generated, compared with much lower rates for essays by native speakers.

So before you panic, it's essential to know what your score is really telling you.

How to Lower Your AI Detection Score

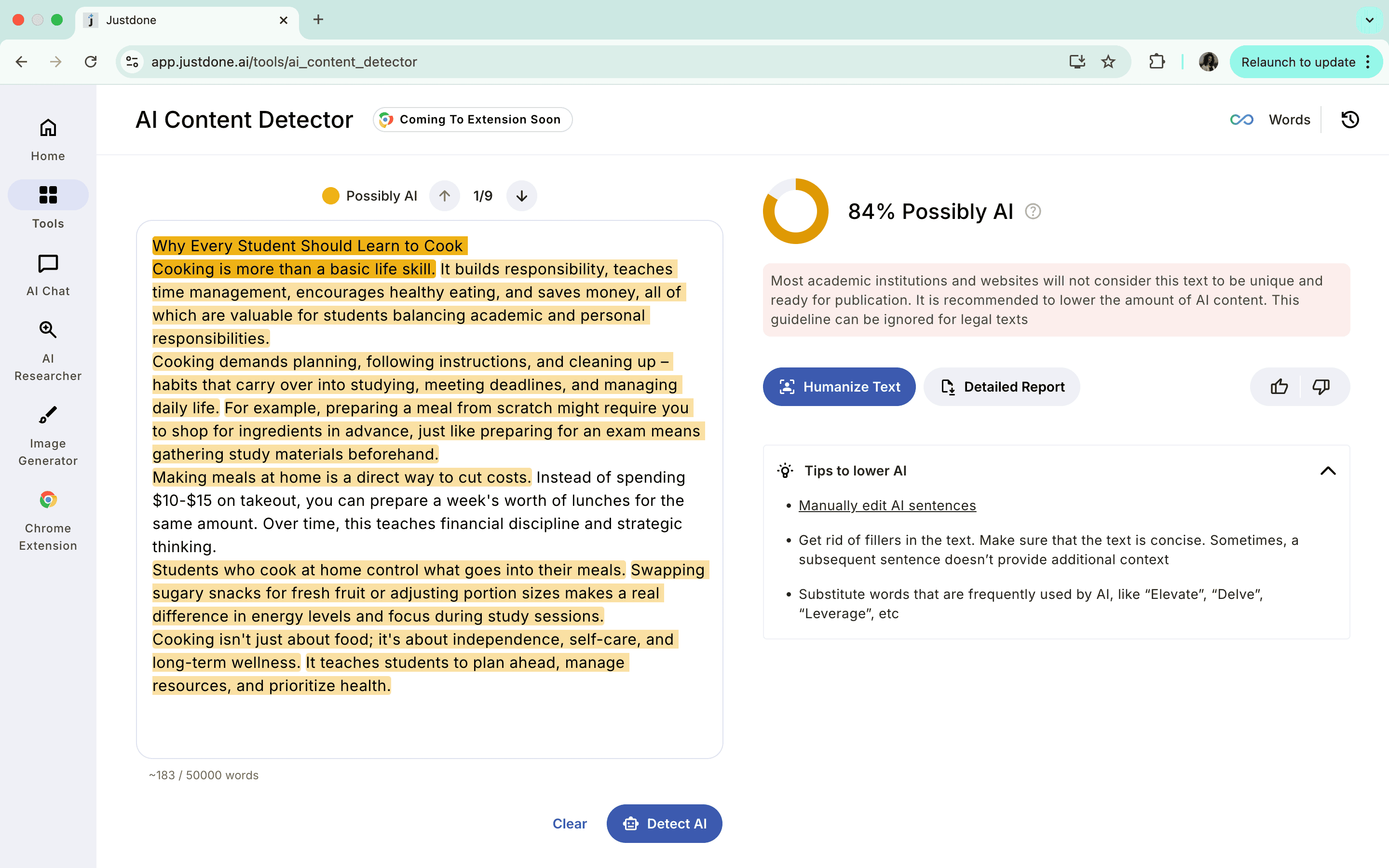

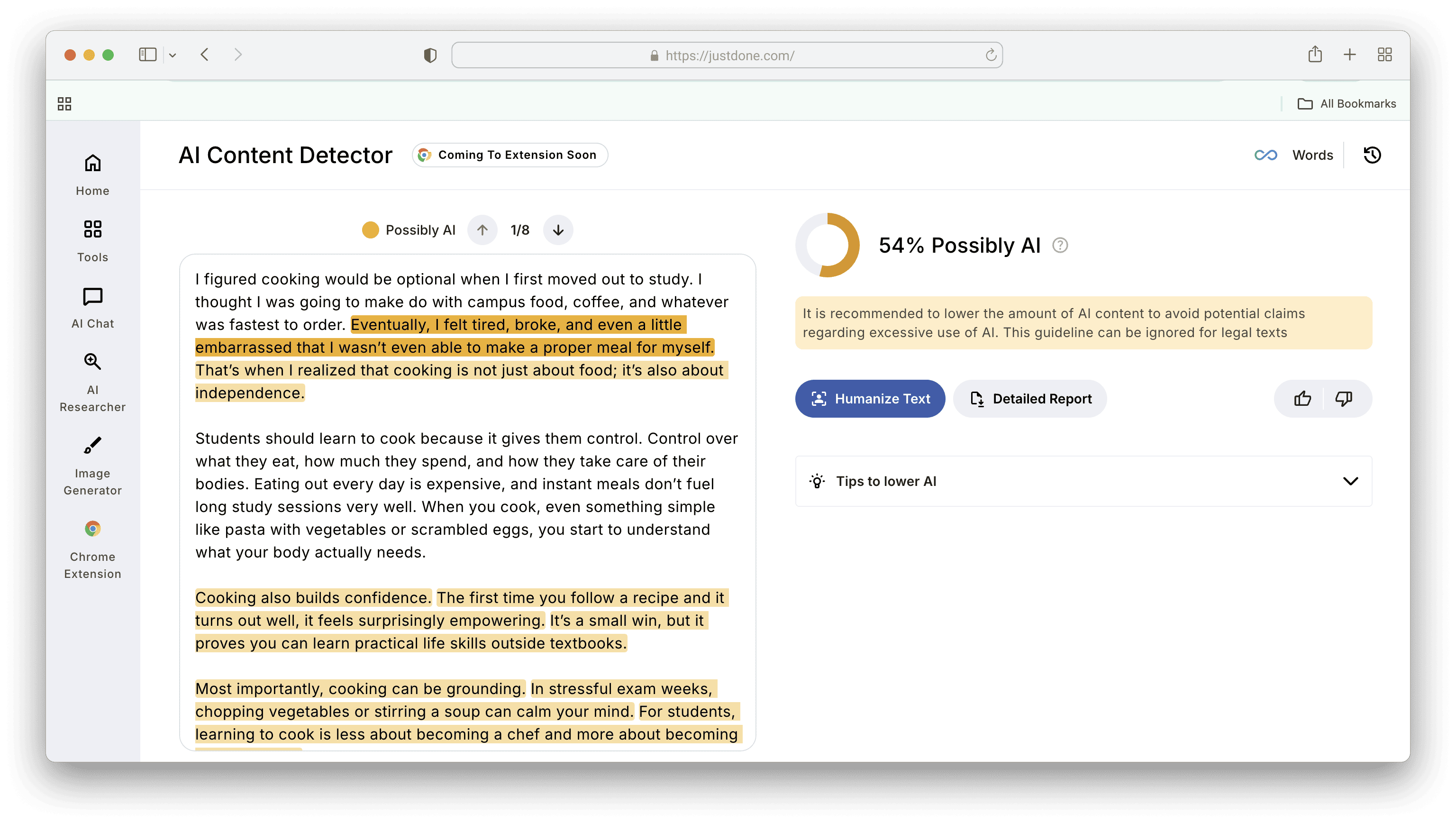

If your AI detection score is higher than you expected, your writing may look too automated. Here's what that can look like for a student's essay checked with JustDone's AI detector:

This essay shows an 84% AI detection score. While policies vary between institutions, such a high score would likely prompt additional review and discussion of your writing process and the sources you used. Remember, detectors are tools for conversation, not automatic judgment.

To lower the AI score, I recommend trying:

- Add personal reflection or experience. Even if it's an academic paper, inserting your own interpretation, examples, or experience can show your voice. AI usually lacks that nuance.

For instance, when I added personal statements to the cooking essay scanned above, its AI detection score dropped to 54%.

- Rephrase obvious, templated phrases. Sentences like "In today's fast-paced world…" or "Technology has changed our lives in many ways…" scream automation. Rewrite them to sound more like you. You'll find more typical AI words and phrases, plus tips on replacing them, in my previous article about AI ethics.

- Avoid filler and wordy transitions. AI often uses overly long transitions or generic summaries. Get to the point, and vary your sentence length to sound more natural.

- Keep your sources visible. If you used AI to help summarize ideas, make sure to cite the original sources. This not only lowers suspicion but also shows academic integrity.

- Use an AI detector before submission. It highlights which parts of your text might trigger concern, so you can revise with confidence. It's much easier to fix a few paragraphs now than to rewrite the whole thing later. Plus, JustDone offers a built-in AI Humanizer to make rewriting easier.
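The advice to vary your sentence length is easy to self-check before you run a detector. The sketch below is an illustration under stated assumptions, not how JustDone or any other detector scores text: it splits a passage into sentences with a naive regular expression (which will mis-split abbreviations like "e.g."), then reports the mean sentence length in words and the coefficient of variation (standard deviation divided by mean). A value near zero means your sentences are almost all the same length. The helper name `sentence_length_stats` is hypothetical.

```python
import re
import statistics


def sentence_length_stats(text: str) -> tuple[float, float]:
    """Return (mean words per sentence, coefficient of variation).

    Sentences are split naively on ., ! or ? followed by whitespace.
    """
    sentences = [s for s in re.split(r"(?<=[.!?])\s+", text.strip()) if s]
    lengths = [len(s.split()) for s in sentences]
    if not lengths:
        return 0.0, 0.0
    mean = statistics.mean(lengths)
    # Population standard deviation; it is 0.0 when all lengths are equal.
    spread = statistics.pstdev(lengths)
    return mean, spread / mean


uniform = "The tool is fast. The tool is cheap. The tool is good."
varied = ("It works. After a week of testing on real essays, "
          "I trusted it more than I expected to. Mostly.")
print(sentence_length_stats(uniform))
print(sentence_length_stats(varied))
```

The numbers only tell you whether your rhythm is monotonous; they say nothing about whether the content sounds like you.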

What Is Considered AI Writing

Not all AI-assisted content is the same. The line between AI-generated and human-written is less black-and-white than most people assume. Here's how we break it down.

Classified as AI-generated:

- Text produced by AI and published without any editing

- Text produced by AI and edited by a human

- Human-written text that was heavily rewritten by AI

- Content built from an AI outline, then written and heavily edited with AI assistance

Classified as human-written:

- Human-written text with only light AI edits (roughly 5% or less of the content changed)

- Human-written text informed by AI research but written independently

- Content written and edited entirely by a human

The tipping point is how much of the final voice, structure, and language came from the human versus the machine. Light AI assistance, like a grammar fix, a rephrased sentence, or a research prompt, doesn't disqualify a piece from being genuinely human-written. But when AI shapes the majority of what's on the page, the content is AI-generated regardless of how much a human touched it afterward.
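The "roughly 5% or less" rule of thumb above can be estimated by comparing your draft with the AI-edited version word by word. Here is a rough sketch using Python's standard-library `difflib`. It measures the share of your original words that did not survive unchanged and in order; words the AI added are not counted, which is only one possible definition of "content changed", and detectors don't classify text this way. The function name `share_changed` is hypothetical.

```python
import difflib


def share_changed(original: str, edited: str) -> float:
    """Fraction of the original's words that don't survive unchanged in the edit.

    Uses difflib.SequenceMatcher on word lists: words inside matching blocks
    were kept in order, every other original word counts as changed.
    """
    before, after = original.split(), edited.split()
    if not before:
        return 0.0  # nothing to compare against
    matcher = difflib.SequenceMatcher(None, before, after, autojunk=False)
    kept = sum(block.size for block in matcher.get_matching_blocks())
    return (len(before) - kept) / len(before)


draft = ("I spent the summer working at my uncle's bakery and learned "
         "that good bread depends on patience more than on any recipe")
edited = draft.replace("learned", "discovered")
print(f"{share_changed(draft, edited):.0%} of words changed")
```

A single swapped word in a short paragraph already approaches the 5% mark, so the threshold is about light touch-ups, not rewrites.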

Comparing Popular AI Detection Tools for Students

When it comes to checking your AI detection score, tools differ in what they show and how you can act on it. Here's a quick breakdown of how the most common AI detection tools compare for student use:

| Tool | What It Shows | Best For | Limitations |
|---|---|---|---|
| JustDone AI Detector | Percent AI + flagged sentences + AI humanizer built-in | Students and anyone else revising for authenticity | More focused on clarity than enforcement |
| Turnitin AI Writing Detector | Percent flagged as AI + section highlighting | Academic institutions checking for policy violations | Not always transparent about how the score is calculated |
| GPTZero | Sentence-level probability + per-paragraph flags | Educators scanning quickly | Less intuitive for students to use |
| Originality.ai | Detailed AI probability + plagiarism detection + sentence highlighting | Professional content review | Requires a subscription, can be expensive for individuals |

If you're serious about submitting clean, original work without surprises, JustDone's AI Detector offers the clearest guidance. You're not left guessing; you're editing with direction.

How to See Which Parts of Your Text Are Most Likely AI-Generated

An overall AI score is useful, but sentence-level detail is where the real insight lives. Once your scan is complete, JustDone's AI Detector highlights individual sentences based on how likely they are to be AI-generated, so you know exactly where to focus your edits.

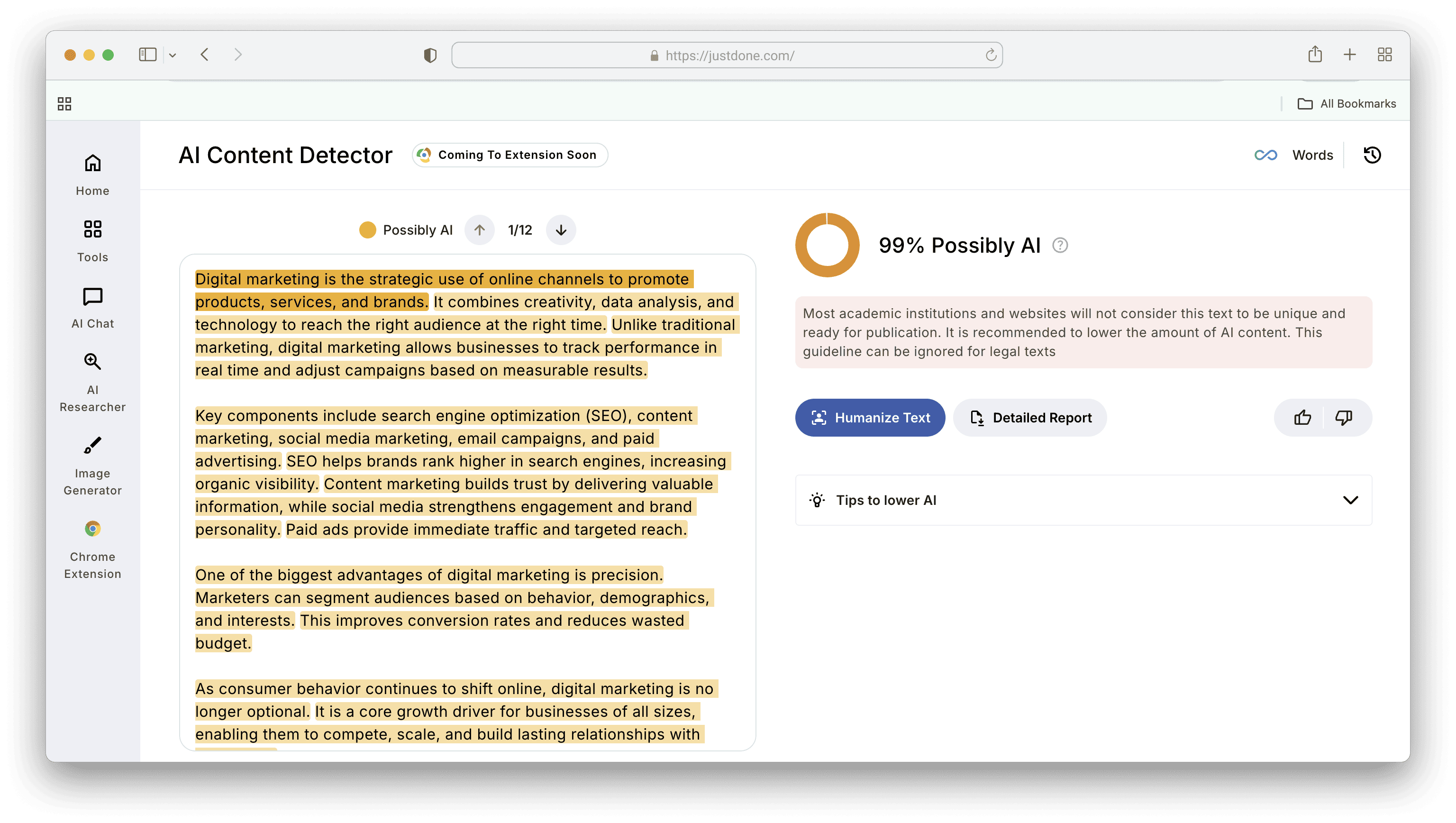

The highlighting works like this:

Orange and yellow highlights indicate sentences the detector flagged as likely AI-generated or heavily edited with AI.

To see this in action, we prompted ChatGPT to generate 100 words on digital marketing and ran the result through JustDone's AI Detector.

The AI-generated sentences were immediately flagged in orange and yellow, making it easy to see at a glance which parts of a text need attention and which are clear.

This kind of sentence-level visibility is especially useful when you're working with mixed content, such as a draft that combines your own writing with AI-assisted sections. Rather than guessing which parts might be flagged, you can see them directly and revise with precision.
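One plausible way to connect sentence-level highlights to an overall percentage is to report the share of words that sit in flagged sentences. This is a hypothetical sketch only: JustDone doesn't publish how its score is aggregated, so the per-sentence probabilities, the 0.5 flag threshold, and the function name `overall_ai_share` are all assumptions made for illustration.

```python
def overall_ai_share(sentences: list[tuple[str, float]], threshold: float = 0.5) -> float:
    """Percentage of words in sentences whose AI probability is >= threshold.

    Each item is (sentence_text, ai_probability). Both the probabilities and
    the threshold are hypothetical inputs, not values from any real detector.
    """
    total_words = sum(len(text.split()) for text, _ in sentences)
    if total_words == 0:
        return 0.0
    flagged_words = sum(len(text.split()) for text, p in sentences if p >= threshold)
    return 100 * flagged_words / total_words


# Hypothetical mixed draft: two personal sentences, two templated ones.
draft = [
    ("I burned my first three loaves before it clicked.", 0.10),
    ("In today's fast-paced world, baking offers many benefits.", 0.92),
    ("My grandmother never measured anything.", 0.15),
    ("Technology has changed our lives in many ways.", 0.88),
]
print(f"{overall_ai_share(draft):.0f}% of words flagged")
```

Under this kind of aggregation, rewriting the two templated sentences would remove most of the flagged share, which matches the practical advice: fix the highlighted sentences rather than the whole draft.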

Wrapping Up on AI Detection Score

Your AI detection score is just a pattern analysis, not a judgment of your integrity. Even low scores can contain incorrect predictions, and high scores can be lowered with careful AI editing and authentic additions.

When you write something, ask yourself: Does this sound like something I would say? Did I actually think this through, or did I just copy a suggestion? If asked, could I explain my choices? As long as you stay curious, stay honest, and use the right tools, you've got nothing to fear.

F.A.Q.

Are plagiarism scores and AI scores the same thing?

No. A plagiarism score measures how much of your text matches existing published content. An AI score measures how likely your text was generated by AI. A piece of writing can be fully original (never published anywhere) and still score high for AI. They measure different things and should be read separately.

Is JustDone's AI score accurate?

JustDone AI detector is a reliable tool with 94%-99% accuracy. JustDone uses deep language analysis and sentence-level highlighting to show exactly which parts triggered the score, not just a number. But treat results as a strong indicator, not a final verdict.

How does JustDone's AI detector work?

It uses machine learning models trained on both human and AI-generated text. When you submit content, it analyzes perplexity, burstiness, and sentence structure to estimate AI likelihood, then highlights the specific sentences most likely to be AI-generated.
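Perplexity has a standard definition: the exponential of the average negative log-probability that a language model assigns to each token, so text the model finds very predictable gets a low perplexity. The sketch below computes it from a list of per-token probabilities. It assumes those probabilities come from some language model (not shown), and it is a generic illustration of the metric, not JustDone's implementation.

```python
import math


def perplexity(token_probs: list[float]) -> float:
    """exp of the mean negative log-probability over the tokens."""
    if not token_probs or any(not 0 < p <= 1 for p in token_probs):
        raise ValueError("need a non-empty list of probabilities in (0, 1]")
    avg_neg_log = -sum(math.log(p) for p in token_probs) / len(token_probs)
    return math.exp(avg_neg_log)


predictable = [0.9, 0.8, 0.95, 0.85]  # the model expected almost every token
surprising = [0.2, 0.05, 0.4, 0.1]    # the model was often surprised
print(perplexity(predictable), perplexity(surprising))
```

Detectors generally treat unusually low perplexity as one signal of machine-generated text, which is why predictable, templated phrasing tends to raise AI scores.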

What percentage of AI is acceptable? Is 40% AI bad?

It depends on context. In academic settings, most institutions flag any significant AI use, so 40% would almost certainly raise concerns. For content marketing, thresholds are less clearly defined, but high scores can hurt search performance and credibility. If your content scores above 20%, it's worth revisiting the flagged sections.