You write your own essay, polish your poem, or comment your Python script carefully. You hit submit. Then, an automated system tells your teacher the work might be AI-generated.

Welcome to the world of AI detection failures, or so-called false positives, where software that was never trained to understand context, creativity, or technical writing misreads student work. These errors don’t just frustrate students. They damage trust, and in some cases, they lead to academic penalties.

AI detection tools have become popular in schools, but their accuracy on academic work varies widely depending on the type of content being tested. That’s not a glitch. It’s a fundamental weakness of how these tools were trained.

In this article, you’ll see how false positives happen, which formats are most vulnerable (with examples), and how you can protect your work from unfair flags.

Not All Content Is Equal: Breaking Down How AI Detection Tools Fail

Detection tools like GPTZero or Turnitin's AI module analyze text using statistical patterns. They look for things like predictability, repetition, or sentence smoothness. When they find those traits, they assign a "likely AI" score.
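To make "predictability" and "smoothness" a little more concrete, here is a toy Python sketch of one signal in that family: how uniform sentence lengths are. It is an illustration under simplifying assumptions (naive sentence splitting, word counts as length), not how GPTZero or Turnitin actually score text; their models are more complex, and the function names here are hypothetical.

```python
import re
import statistics


def sentence_lengths(text: str) -> list[int]:
    """Naively split text on ., ! or ? and count the words in each sentence."""
    sentences = re.split(r"[.!?]+", text)
    return [len(s.split()) for s in sentences if s.strip()]


def sentence_length_variation(text: str) -> float:
    """Coefficient of variation of sentence lengths (population std dev / mean).

    Low values mean every sentence is about the same length, the kind of
    even rhythm a detector might read as "smooth". This is a toy proxy,
    not the scoring method of any real detection tool.
    """
    lengths = sentence_lengths(text)
    if len(lengths) < 2:
        return 0.0
    return statistics.pstdev(lengths) / statistics.mean(lengths)


uniform = "The plan was clear. The team was ready. The work was done. The goal was met."
varied = "We tried. Nothing worked for three long, frustrating weeks of late nights. Then it clicked."

print(round(sentence_length_variation(uniform), 2))  # 0.0
print(round(sentence_length_variation(varied), 2))   # 0.71
```

In this toy measure, the evenly paced text scores 0.0 and the varied one about 0.71. A careful essay written in consistently mid-length sentences drifts toward the low end, which is one way disciplined human writing can end up resembling machine output.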

But here's the catch: most well-written student work fits these patterns.

A concise summary, a carefully structured argument, a cleanly commented function: all of these can look like AI output, especially when detectors haven’t been trained to distinguish machine-generated prose from high-quality student submissions.

That’s why AI detection false positives are not isolated mistakes. They are the result of flawed assumptions built into the system.

Why AI Detection Tools Fail with Specific Content Types

Let’s look at the formats you most likely use and why each one triggers so many false positives.

The first format that comes to mind is academic papers you write in school: essays, research papers, theses, reports, etc. Academic AI detection accuracy tends to break down when students follow rubrics too well. Structured paragraphs, formal tone, and balanced arguments are traits that many detectors associate with LLM output.

For instance, here's an example of text that many AI detectors are likely to flag:

"Global economic policy has shifted since 2008. Governments responded with coordinated stimulus packages, yet inequality continued to rise globally."

There’s nothing artificial here. Just standard academic structure. But because it’s polished and impersonal, detectors often misread it.

Tip: Use first-person reflection when appropriate. Include references to class readings or your own analysis.

Another important format to analyze is creative writing. Here, AI detection fails for the opposite reason: it flags anything that is too clean, too poetic, or too symbolic.

Consider this example:

"The sky bruised itself into violet. Somewhere, a siren sang against silence. I didn’t move. I didn’t need to."

This could be a brilliant original line. Or a cliché. AI detectors don't know. If the language feels literary or stylized, they sometimes treat it as suspicious.

Tip: Break the structure. Use dialogue, abrupt transitions, or internal thought patterns. This adds human texture that AI doesn’t imitate well.

Did you know that poetry often gets flagged by AI checkers for being… too poetic? Poetry detection suffers because models are rarely trained on poems. Detectors expect long sentences with clear grammar. They don’t understand line breaks, repetition, or metaphor.

Let's look at this poem:

"I write in ink / but dream in graphite / afraid of permanence"

This kind of short-form poetry, especially if it rhymes or repeats imagery, can confuse detectors into assigning a high AI probability.

Tip: Submit an author’s note. A few lines about inspiration or structure can support your authorship if your poem is reviewed.

It may surprise you, but code and documentation are often falsely flagged too. Why? Because clean code looks like AI code. That’s the core problem with AI code detection today. If your comments are short and clear, they resemble automated documentation. Even good variable naming can push the detector toward a false match.

Here’s an example:

```python
# Checks if user is logged in before displaying dashboard
def display_dashboard(user):
    if not user.logged_in:
        return redirect_to_login()
    return render_dashboard(user)
```

There’s nothing AI about this. But it looks neat, and neat equals suspicious in many content testing tools.

Tip: Add notes on debugging, logic choices, or even failed attempts. AI rarely documents its mistakes. You do.
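Here is a sketch of what that tip might look like applied to the dashboard function. The `User` class and the two helper functions are hypothetical stand-ins so the snippet runs on its own, and the debugging note describes an invented mistake purely to show the style of comment.

```python
from dataclasses import dataclass
from typing import Optional


# Hypothetical stand-ins so this example runs on its own.
@dataclass
class User:
    name: str
    logged_in: bool


def redirect_to_login() -> str:
    return "redirect:/login"


def render_dashboard(user: User) -> str:
    return f"dashboard for {user.name}"


def display_dashboard(user: Optional[User]) -> str:
    # Checks if user is logged in before displaying dashboard.
    # Process note (illustrative): my first version only checked
    # user.logged_in and crashed with AttributeError when no user was
    # passed, so I added the `user is None` check first.
    # Logic choice: redirect instead of raising an error, because a
    # logged-out visitor is a normal case, not a bug.
    if user is None or not user.logged_in:
        return redirect_to_login()
    return render_dashboard(user)


print(display_dashboard(User("ana", logged_in=True)))   # dashboard for ana
print(display_dashboard(User("ben", logged_in=False)))  # redirect:/login
print(display_dashboard(None))                          # redirect:/login
```

The code is barely longer, but the comments now record a decision and a fix, which is the kind of trace a reviewer can connect to you.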

How to Handle Each Content Type

You can’t control what detectors do. But you can write and submit in ways that reduce the risk of being misread.

Most AI detectors work by analyzing writing patterns, vocabulary choices, and structural elements learned from general text. Different content types have their own characteristics, and those can confuse these algorithms.

Whatever you're writing, these strategies help establish that the work comes from a human author.

- Use more than one detection tool.

Cross-checking with multiple detectors helps you understand how different algorithms interpret your work and gives you a clearer picture of potential issues.

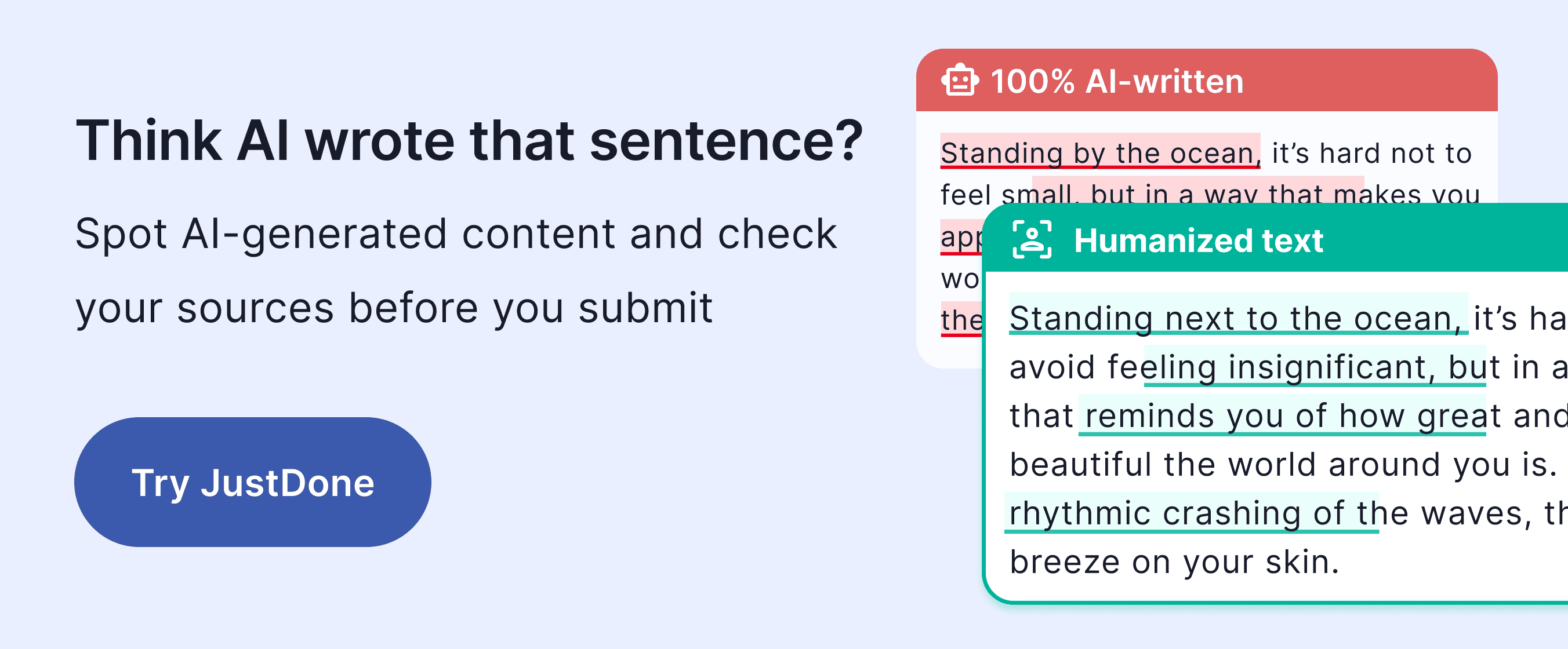

It works like this: Different tools make different guesses. If one flags you and another doesn’t, that’s useful data, especially if you're dealing with something sensitive like poetry or code. Cross-testing gives you more confidence. Try JustDone's AI detector made special for student writings and check the results. - Keep process history.

Keep evidence of your writing process: drafts, research notes, revision history, and timestamps. Google Docs shows edits. Overleaf tracks changes. GitHub or even timestamps in Notion can support your authorship if questioned. - Include commentary when needed.

Adding personal explanations and reasoning. Especially for code or experimental writing, a short explanation of what you tried and why you revised can help educators see your thinking. AI doesn't explain its logic. You can. - Avoid over-polishing.

Ironically, too much structure makes your writing look artificial. Detectors love uniform paragraphs and smooth transitions. If everything looks machine-perfect, it might get flagged. Vary your rhythm. Add a short sentence in between two longer ones. Let your own tone show. - Ask for manual review if flagged.

Request manual review when needed. When detectors give unclear results, a human review can help clarify things, so feel free to ask for one.

If a detector scores your work as likely AI, ask your instructor for a second look. Stay calm and be ready to show drafts, notes, and the steps you took. Most educators know these tools aren’t perfect.
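Being ready to show drafts is easier if you save them as you go. Google Docs, Overleaf, and GitHub keep that history for you; if you write in plain files instead, a small script can do something similar. The Python sketch below is one hypothetical way to do it: the `snapshot_draft` function, the `drafts` folder, and the `history.log` file are illustrative names, not features of any tool mentioned in this article.

```python
import hashlib
import shutil
from datetime import datetime
from pathlib import Path


def snapshot_draft(source: Path, drafts_dir: Path) -> Path:
    """Copy `source` into `drafts_dir` under a timestamped name and log its hash.

    The folder layout and log format are illustrative choices, not a standard.
    """
    drafts_dir.mkdir(parents=True, exist_ok=True)
    stamp = datetime.now().strftime("%Y%m%d-%H%M%S")
    target = drafts_dir / f"{source.stem}-{stamp}{source.suffix}"
    shutil.copy2(source, target)  # copy2 also preserves the modification time

    # Record a SHA-256 digest so a later copy can be matched to this snapshot.
    digest = hashlib.sha256(target.read_bytes()).hexdigest()
    with open(drafts_dir / "history.log", "a", encoding="utf-8") as log:
        log.write(f"{stamp}\t{target.name}\t{digest}\n")
    return target


# Example (hypothetical file names), run after each writing session:
# snapshot_draft(Path("essay.txt"), Path("drafts"))
```

Two snapshots taken within the same second would share a file name; adding `%f` (microseconds) to the format string avoids that if you save very often.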

Understanding your content's unique characteristics helps you work more confidently with detection tools.

The Universal Detector Myth: Tailored Strategies for Each Content Type

Different content types require different approaches because AI detectors aren't designed to handle the full spectrum of human writing. If a creative piece, a clean function, or a reflective journal entry can all be flagged, then AI detection errors are not random glitches. They are design limitations.

Students who follow the rules, apply feedback, and polish their work can end up penalized because the tool expects sloppier writing from humans.

This leads to a chilling effect where students write to avoid flags, not to express themselves or explore the topic fully. That’s not good education. That’s algorithm anxiety.

To see just how frequent these errors are, check our deep dive on false positive rates, where we break down tool-by-tool detection trends and test results.

Conclusion: AI Detectors Fail, So Be Ready to Defend Your Writing

The best protection against false flags is transparency. Write your own work. Keep your drafts. Share your thinking. If a detector mislabels you, that’s not the end of the story.

The future of education shouldn’t be shaped by flawed models guessing at authorship. It should be shaped by students who know how to work with tools, not fear them. AI detection tools aren’t evil, but they’re also not reliable across all types of student work. They’re fast and scalable, but not nuanced. They guess based on structure, not substance. When your writing is creative, concise, or well-structured, you might trigger a false alarm.

You deserve to be judged by your ideas, not by how well your sentences fit into someone else's model of what “human” sounds like.

Keep writing with confidence. Machines may scan your work, but they don’t get to define it.