When I first started working with AI content detection tools, such as AI writing checkers and plagiarism scanners, I believed they were fairly straightforward: they read text, analyze patterns, and decide whether something looks human or machine-generated. But the deeper I got into the field, the more I realized there’s an entire layer of complexity most students and content creators don’t see. That layer is called adversarial AI, and it’s reshaping the way we think about content authenticity and detection. This guide explains how it works and how to handle it in your own writing.

What Adversarial AI Means

Adversarial AI refers to the techniques used to fool machine learning models. These aren't just technical experiments for research labs; they’re real-world manipulations that happen every day. When you hear about adversarial examples in AI detection, you’re hearing about ways to intentionally trick AI detectors into making the wrong decision. For example, someone might take an AI-generated essay and tweak it just enough that a detector classifies it as human-written, even though it’s not.

On the flip side, a student might write a paper entirely on their own, only to have it mistakenly flagged as AI-generated because the text shares certain statistical patterns with machine output. That’s a false positive rather than a deliberate attack, but it stems from the same weakness.

The problem is, AI detectors aren’t perfect. They rely on pattern recognition: things like predictability, sentence complexity, and word variation. But those patterns can be manipulated, either on purpose or by accident. I’ve worked with students who genuinely wrote their assignments but got flagged because their style was too clean, too formulaic, or too similar to AI-generated text. Others used tools like humanizers to rewrite their drafts and bypass detection systems. These are real examples of adversarial interactions with AI detectors, and they’re becoming more common.
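To make those patterns concrete, here is a minimal Python sketch of two of the signals mentioned above: how much sentence length varies and how varied the vocabulary is. The function names and the naive sentence splitter are my own assumptions for illustration, not how any particular detector works, and predictability (which needs a language model to measure) is left out.

```python
import re
import statistics


def split_sentences(text):
    # Naive splitter (an assumption): break after ., ! or ? followed by whitespace.
    return [s for s in re.split(r"(?<=[.!?])\s+", text.strip()) if s]


def style_features(text):
    """Return simple stylometric features for a piece of text."""
    sentences = split_sentences(text)
    words = re.findall(r"[A-Za-z']+", text.lower())
    # Word count of each sentence.
    lengths = [len(re.findall(r"[A-Za-z']+", s)) for s in sentences]
    return {
        "sentences": len(sentences),
        "mean_sentence_length": statistics.mean(lengths) if lengths else 0.0,
        # Low spread means very uniform sentences.
        "sentence_length_stdev": statistics.pstdev(lengths) if lengths else 0.0,
        # Distinct words divided by total words.
        "type_token_ratio": len(set(words)) / len(words) if words else 0.0,
    }


if __name__ == "__main__":
    uniform = "The cat sat. The cat sat. The cat sat."
    varied = "I ran. Then, after a long and winding day, I finally slept."
    print(style_features(uniform))
    print(style_features(varied))
```

Very uniform sentences and a small vocabulary push both numbers down, which is roughly what "too clean" or "too formulaic" looks like when you reduce it to statistics. A real detector combines many more signals than this.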

Adversarial Examples in AI Detection: How They Work

So, how do these adversarial examples in AI detection actually work? Basically, AI detectors use machine learning models trained on large datasets of human and AI writing. They look for statistical markers that differentiate one from the other. But what happens when you intentionally or unintentionally change your text to confuse the model?

Imagine you write a paragraph using ChatGPT, then use a tool to swap out a few words, rearrange sentences, and add some randomness. Suddenly, the AI detector might not recognize the structure anymore. To the system, the writing no longer fits the typical AI pattern, even though the ideas and the first draft still came from a model. The result is an adversarial example, whether you meant it that way or not.

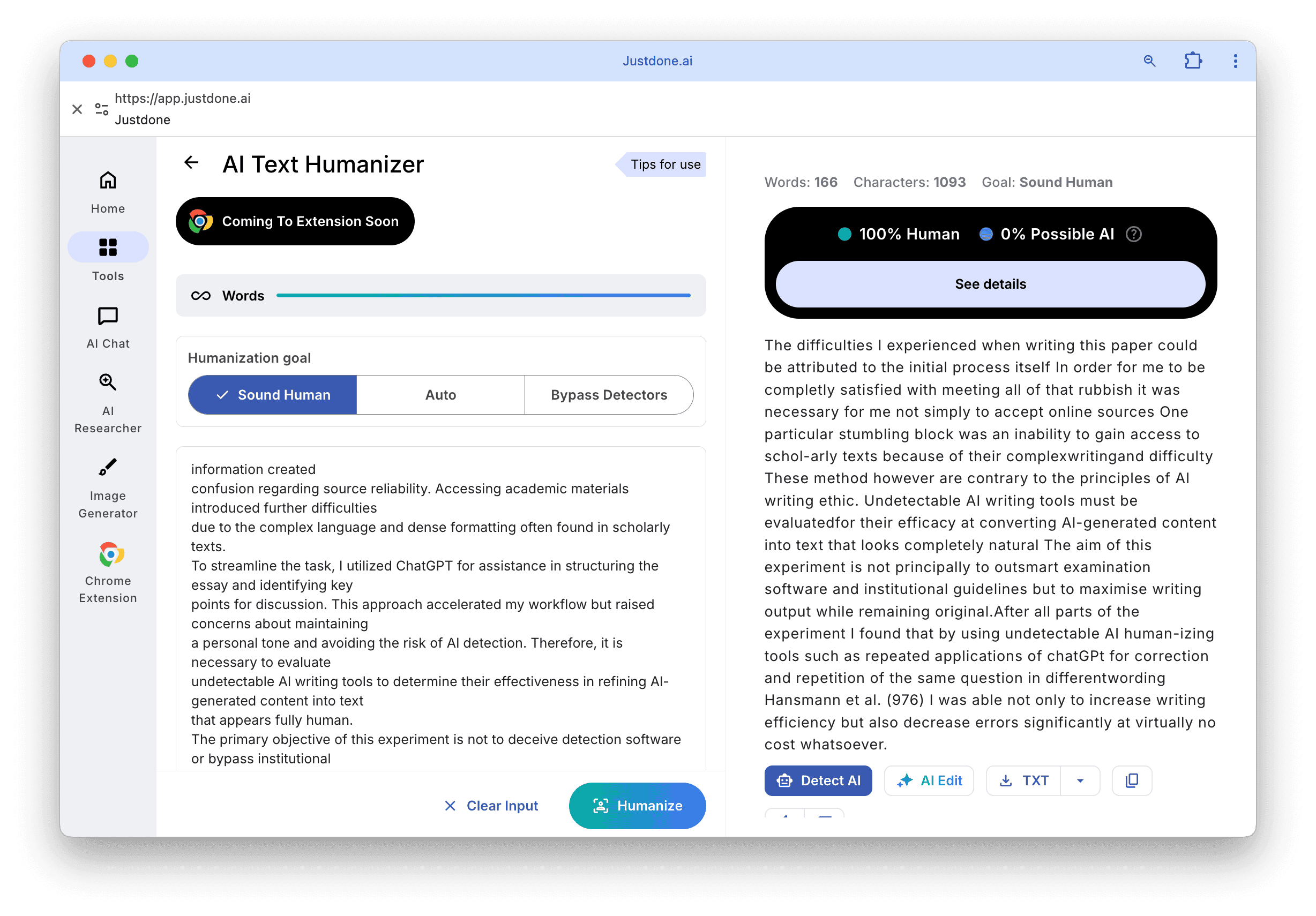

Some people do this deliberately to beat detection systems. Others stumble into it accidentally when they edit their text to sound more natural. For example, students who use paraphrasing tools like JustDone’s AI Humanizer are often trying to make their work sound authentic and personal. But these edits can also trick AI detectors, especially if the edits introduce variations that detectors aren’t trained to expect. The line between normal editing and adversarial manipulation can get blurry fast.

AI Detector Vulnerabilities: Types of Attacks That Affect Content Authenticity

The vulnerabilities of AI detectors fall into a few major categories, all of which impact content authenticity checks in real life. One type is known as perturbation attacks. This happens when someone makes small, barely noticeable changes to text that fool the AI system but not a human reader. You might adjust punctuation, swap synonyms, or add extra words that don’t change the meaning but confuse the model.
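A toy example shows why small edits can matter so much. The sketch below is deliberately simplistic: the "detector" just counts a few marker words, and both the marker list and the synonym table are hypothetical values I made up for illustration. Real detectors use learned models, not word lists, but the mechanism is similar: a change that a reader barely notices can shift the score.

```python
import re

# Hypothetical marker words for a toy detector (an assumption for this demo).
MARKERS = {"moreover", "furthermore", "additionally", "delve"}

# Hand-written synonym table, also made up for illustration.
SYNONYMS = {
    "moreover": "also",
    "furthermore": "besides",
    "additionally": "plus",
    "delve": "dig",
}


def toy_score(text):
    """Fraction of words that are marker words (0.0 for empty text)."""
    words = re.findall(r"[a-z']+", text.lower())
    if not words:
        return 0.0
    return sum(w in MARKERS for w in words) / len(words)


def perturb(text):
    """Swap each marker word for a synonym, keeping initial capitalization."""
    def swap(match):
        word = match.group(0)
        replacement = SYNONYMS.get(word.lower())
        if replacement is None:
            return word
        return replacement.capitalize() if word[0].isupper() else replacement

    return re.sub(r"[A-Za-z']+", swap, text)


if __name__ == "__main__":
    original = "Moreover, the results are clear. Furthermore, we delve into details."
    edited = perturb(original)
    print(toy_score(original), original)
    print(toy_score(edited), edited)
```

The meaning of the sentence is unchanged, yet the toy score drops from 0.3 to 0.0. That gap between what a human reads and what the model measures is the vulnerability.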

Another type is paraphrasing attacks. This is where you take machine-generated text and rephrase it enough times that the original AI fingerprints disappear. Some people do this manually, but most use automated tools. The danger here is that even detectors designed to spot AI content might miss paraphrased versions if the structure is sufficiently altered. Students don’t necessarily mean to exploit AI detector vulnerabilities, but when they ask ChatGPT to rewrite their draft multiple times, or when they feed the same text through different humanizers, the final version often escapes detection.

There’s also the issue of language translation. Some users translate text into another language and then back to the original. This process removes certain statistical patterns that AI detectors rely on, making the text harder to classify. I had a student from Germany who translated his AI-generated research summary from English to German and back to English again. The result was readable but awkward. Yet, surprisingly, the AI detector cleared it as 100% human because the pattern no longer matched the AI profile.

Personal vs. Organizational Protection Strategies

This is where the difference between personal and organizational protection strategies becomes crucial. For individual writers, the best defense is understanding how AI detectors work and taking steps to preserve your own writing voice. For organizations, however, the approach must include broader policy-making, clear guidelines on AI tool usage, and educating both students and employees about responsible AI-assisted writing. When institutions take these steps, they not only prevent accidental misuse but also help foster a culture of content authenticity and ethical collaboration with technology.

How Content Creators Can Navigate AI Detector Vulnerabilities

If you’re wondering how to get past AI detection tools such as Turnitin or GPTZero, the honest answer is that the goal shouldn’t be to "cheat" detectors but to understand how they work and why adversarial examples matter. The more you know, the better you can create authentic content without worrying about false flags or accidental issues.

First, remember that content authenticity means more than avoiding AI detectors: your work should reflect your own ideas and effort. That’s why, if you use AI tools to help with brainstorming, drafting, or editing, you need to be aware of how AI detectors interpret your text.

One practical tip from me is to check your drafts early using tools like the JustDone AI detector. This allows you to see what an AI checker might flag before you submit your work. If you spot sections that look too mechanical or repetitive, you can revise them naturally. The goal isn’t to beat the system, but to produce writing that sounds like you.
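You can also do a quick self-check before reaching for any tool. Here is a small sketch, assuming that repeated sentence openers are one sign of the "mechanical" feel described above. The function name and the threshold are my own choices, and this is a rough writing aid, not a detector.

```python
import re
from collections import Counter


def repeated_openers(text, min_count=2):
    """Return first words that open at least `min_count` sentences."""
    # Naive split (an assumption): break after ., ! or ? followed by whitespace.
    sentences = [s for s in re.split(r"(?<=[.!?])\s+", text.strip()) if s]
    openers = []
    for sentence in sentences:
        words = re.findall(r"[A-Za-z']+", sentence.lower())
        if words:
            openers.append(words[0])
    counts = Counter(openers)
    return {word: n for word, n in counts.items() if n >= min_count}


if __name__ == "__main__":
    draft = "The tool reads text. The tool scores it. It then reports. The end."
    print(repeated_openers(draft))
```

If most of your sentences start with the same word, varying a few of them by hand is usually enough to make the passage read more like your own voice.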

Another tip is to avoid over-editing with automated humanizers. These tools are helpful, but if you run your text through too many layers of AI rephrasing, you might create an adversarial example without meaning to. The result could be content that fools detectors but feels off to your readers. Instead, combine light AI assistance with your own editing to preserve your voice.

For content creators who publish online, it’s also important to stay transparent. AI tools can be powerful partners in your creative process, but your audience values originality. If you use AI to generate drafts, explain your process. Building trust with your readers matters more than ever.

Conclusion: What Adversarial AI Means for the Future of Content Authenticity

Adversarial AI is not just a technical problem for engineers to solve. It affects everyday people who write essays, publish blogs, or create digital content, and it matters for learners, freelancers, and marketers alike. The rise of adversarial examples in AI detection changes how we think about writing and originality.

AI detectors have vulnerabilities because they rely on patterns, and those patterns can be disrupted in subtle ways. When you know what triggers detection, you can create content that stays authentic while avoiding accidental red flags.

Tools like the JustDone AI detector are valuable because they help you verify your writing before it gets flagged by stricter institutional checkers. They offer a balanced way to check your content without penalizing creative use of AI support.